Bob's Blog

Purported "AI" is Bullshit

What is Bullshit?

I'm not deliberately trying to be coarse. I'm using the term bullshit as it has been known and used within the study of philosophy since the mid 1980s.

"On Bullshit", Raritan Quarterly Review, 6(2): 81–100, Fall 1986

Philosophy includes both formal logic and epistemology. The second of those examines the nature, origins, and limits of knowledge — what do you believe that you know, what makes you say that, and what lets you say that the supposedly-known concept is something that can truly be known, versus something that must be accepted through faith?

What we informally label as "bullshit" interferes with or even negates both logic and knowledge. That's a problem. Logicians, epistemologists, and others need a way to discuss this problem so we can detect it, distinguish it from other forms of untruth, and prevent or at least reduce its malign effects.

A broadly known and used term already existed, so there's no need to invent a new term that could potentially confuse the matter.

In 1986, the Princeton philosopher Harry Frankfurt provided an academic analysis of what then still was an informal category. This was his "On Bullshit" essay, which led to his 2005 book.

In Frankfurt's clear description, bullshit is speech intended to persuade without regard for the truth. While the liar knows the truth and is attempting to hide it, the bullshitter doesn't care whether what they're saying is true or false. This makes the bullshitter a more insidious threat than the liar. In his book, Frankfurt wrote:

Someone who lies and someone who tells the truth are playing on opposite sides, so to speak, in the same game. Each responds to the facts as he understands them, although the response of one is guided by the authority of the truth, while the response of the other defies that authority, and refuses to meet its demands. The bullshitter ignores these demands altogether. He does not reject the authority of the truth, as the liar does, and oppose himself to it. He pays no attention to it at all. By virtue of this, bullshit is a greater enemy of the truth than lies are.

Frankfurt wasn't arguing that bullshit necessarily makes up a growing fraction of current discourse. Rather, all forms of communication have increased, leading to more bullshit being encountered. He wrote his 1986 essay when USENET was the primary Internet communication channel, and he wrote his 2005 book when email ruled and Facebook was still just a system for Harvard boys to rate and criticize women's appearances. Things have changed, and with that, we encounter more bullshit.

Labeling something as bullshit in this modern philosophical sense is similar to the "Not even wrong" criticism used by theoretical physicist Wolfgang Pauli. He reserved that for claims based on faulty reasoning, so far off the right-versus-wrong axis that there's no logically consistent way to show how wrong they are. If asked for the square root of 16, "4" is the correct answer, "5" is wrong, and "green" is so disconnected from reality that it's not even wrong. Likewise, promoters of generative AI claim that their systems exhibit, or will soon exhibit, human-level consciousness, even though we still have no solid definition for exactly what consciousness is. A claim that can't be tested against any definition is not even wrong.

The huge boom in AI or Artificial Intelligence in the early to mid 2020s largely has been driven by bullshit. I'll lay out the details while providing references. But for the devout AI True Believers, no proof is necessary and no disproof could be adequate.

What Can AI Accomplish?

We have had AI systems for some time. If you understand what these systems can and cannot do, some of them can be quite useful.

An AI system needs some form of pattern recognition so it can interpret its input data. This might take the form of heuristic rules written by humans in an attempt to implement human logic to make decisions. Or it might be statistical pattern recognition, which analyzes the multi-dimensional statistics of multiple classes or categories in order to decide the group to which a new observation most likely belongs. Neural networks end up being a different form of statistical analysis.
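As a rough illustration of the statistical approach, here is a minimal sketch of a nearest-centroid classifier in Python. The class labels, the two-feature measurements, and the function names are all hypothetical, and real systems use far richer statistics than a per-class average. The point is only that the "decision" is a distance comparison.

```python
from math import dist
from statistics import fmean

def train_centroids(samples):
    """Average each class's feature vectors into one centroid per class."""
    centroids = {}
    for label, vectors in samples.items():
        # zip(*vectors) groups the values of each feature across all samples.
        centroids[label] = tuple(fmean(axis) for axis in zip(*vectors))
    return centroids

def classify(centroids, observation):
    """Return the label whose centroid lies closest to the observation."""
    return min(centroids, key=lambda label: dist(centroids[label], observation))

# Hypothetical training data: two measured features per sample.
training = {
    "good": [(1.0, 1.0), (1.2, 0.8), (0.8, 1.2)],
    "defective": [(3.0, 3.0), (3.2, 2.8), (2.8, 3.2)],
}
centroids = train_centroids(training)
label = classify(centroids, (1.1, 0.9))  # lands nearest the "good" centroid
```

The classifier never understands what "good" means. It only reports which group of earlier measurements the new one most resembles.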

AI classifier systems have proven to be very useful. Some medical AI systems can perform as well as or better than a human expert in radiology or pathology, searching images for regions that appear to be malignant. AI systems can be useful in history and archaeology, analyzing a fragmentary text and then generating possible replacements for the missing passages, based on the context of the lacuna and a large collection of similar texts. Industry and agriculture can use AI systems to sort streams of parts or produce: pretty apples to sell whole, ugly apples good for juice or applesauce, and damaged or decayed fruit to be discarded.

However, for the missing text situation, the AI system does not discover what the missing text was. It only provides possible guesses as to what might have been there, based on the statistics of similar passages in known texts.
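To make that limitation concrete, here is a small Python sketch of gap-filling by context statistics. The three "known texts" are invented stand-ins for a large corpus, and the function names are hypothetical. Actual systems use much larger models, but the principle of ranking guesses by frequency is the same.

```python
from collections import Counter, defaultdict

def build_context_counts(texts):
    """Count which word appears between each (word before, word after) pair."""
    counts = defaultdict(Counter)
    for text in texts:
        words = text.lower().split()
        for before, middle, after in zip(words, words[1:], words[2:]):
            counts[(before, after)][middle] += 1
    return counts

def guess_gap(counts, before, after, top_n=3):
    """Rank candidate words for a one-word gap by how often they fit the context."""
    context = (before.lower(), after.lower())
    return counts.get(context, Counter()).most_common(top_n)

# Hypothetical stand-in for a large collection of similar texts.
known_texts = [
    "the king gave the city to his son",
    "the king gave the army to his brother",
    "the king gave the city to the priests",
]
counts = build_context_counts(known_texts)

# Fragment with a lacuna: "... gave the [missing] to ..."
guesses = guess_gap(counts, "the", "to")
```

Here `guesses` ranks "city" ahead of "army" simply because "city" filled that context more often in the known texts. Nothing in the procedure can say which word the damaged original actually contained.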

If human medical specialists rely on AI while reducing or even avoiding analysis and diagnosis on their own, they may lose their skills within a few months.

Of course, if the AI diagnostic tools are as good as, or possibly even better than, the human experts, then maybe it would be reasonable to rely on the AI and let human skills degrade. That is, if you could somehow be absolutely certain that the company that produces it stays in business and doesn't impose exorbitant price increases for its continuing use. And, if you could be absolutely certain that no unforeseen events could possibly interfere with using the AI.

The Carrington Event

"The Machine Stops", E. M. Forster

And Where Does AI Fail?

Generative AI, on the other hand, is the cause of the great mid-2020s AI bullshit surge. These are the systems where the user describes what they want in the form of text, or image, or software, and the AI system generates something that looks reasonable enough.

Generative AI needs a large data set for training. LLM or "Large Language Model" is the usual term for a text-generating model built from such data. Where can the AI companies get that data? They can use "bots", automated processes that "scrape" websites, downloading and saving their content. The companies can simply steal the content.

AI companies have unleashed their scraper bots on Twitter or Reddit or 4chan, and then somehow been surprised when their AI system generates output that's vulgar, racist, and misogynist, just like the input.

The LLM systems have absolutely no knowledge, understanding, or common sense. This has led to AI systems suggesting that you should eat one rock every day, use "about 1/8 cup of non-toxic glue" in your pizza sauce to ensure that the cheese stays on the pizza, or cook a spicy spaghetti dish that includes gasoline. How-To Geek, ZD Net, and many other outlets have compiled lists of amusing but dangerous generative AI output.

The generative AI systems adjust their behavior based on feedback from the human users. If a system provides multiple possible answers, which one does the user select? Or if it provides one response, does the user accept that, rephrase the question, or respond along the lines of "No, not like that, instead ..."?

The huge problem is that users are asking the AI to generate something because they didn't know the answer to their question, or they couldn't create the desired text, or whatever. By definition, generative AI/LLM users, in general, are not good judges of the quality of responses.

Typical users are capable of no more than selecting the responses that seem to be authoritative: the ones that sound more confident and contain enough detail to be convincing. Like that precisely measured 1/8 cup of glue on your pizza.

So, class, have we heard about a source of information that has no understanding or awareness of truth versus fiction, but which confidently spews output that bears a statistical similarity to human text? Yes, Professor Frankfurt told us about this. Generative AI produces bullshit. To put it into cloud computing terminology, it's BaaS or Bullshit-as-a-Service.

Could someone create a generative AI system that didn't default to bullshitting? Perhaps. But definitively answering that question seems like it would require violating Kurt Gödel's 1931 Incompleteness Theorems or Alan Turing's 1936 proof of the undecidable nature of the Halting Problem.

See my later rant about the delusional quest for "hypercomputation", violating the laws of physics and logic to obtain some magical solution.

The Recurring Delusion of Hypercomputation

Purported Commercial AI is Largely Hype

On 10 March 2025, Dario Amodei, the CEO and co-founder of major AI company Anthropic, announced that within three to six months, AI could be writing 90% of the code that software developers were currently writing.

He went on to say that AI could be "writing essentially all of the code" within twelve months. And that AI would have a similar impact "in every industry".

He was careful to qualify his wild claims with "could be" and "may be", because it's all nonsense.

A group in the Media Lab at MIT researched and wrote "The GenAI Divide: State of AI in Business 2025". While generative AI seemed to hold some promise for enterprises, most initiatives are failing. About 5% of AI pilot programs achieve rapid revenue acceleration; the remaining 95% deliver little to no measurable positive impact. This is based on 150 interviews with technical leaders, a survey of 350 employees, and an analysis of 300 public AI deployments.

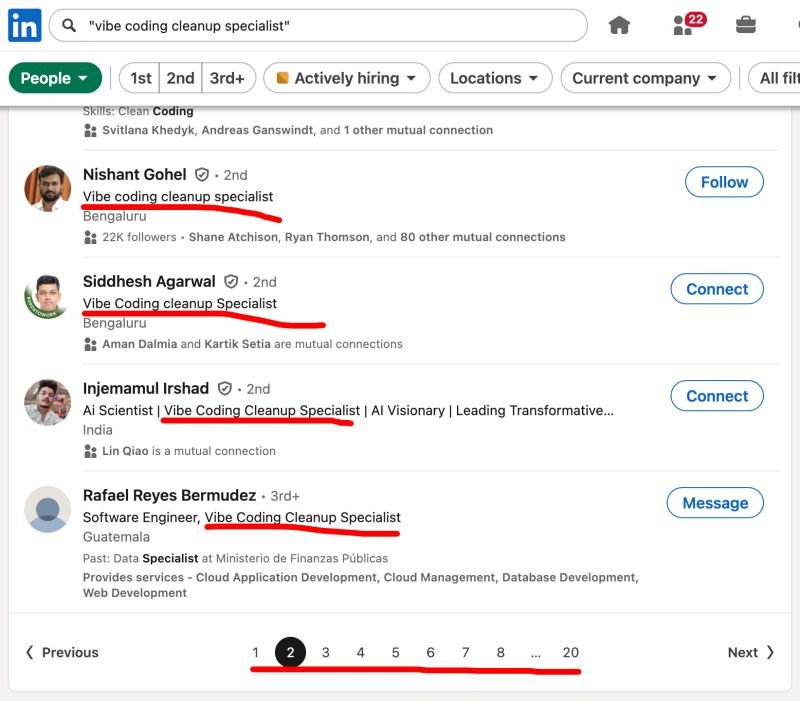

Meanwhile, "vibe coding" has become all the rage. There's no need for a skilled programmer who understands the importance of fully defining the logic and handling exceptional cases. Just tell the AI system what you want to accomplish, and push the resulting code into production! What could possibly go wrong? AI is creating jobs!

Commercial AI Hype is Shameless

Its promoters admit that AI is a bubble, even while asking investors for more money.

"Sam Altman calls AI a “bubble” while seeking $500 billion valuation for OpenAI"

Ars Technica, 21 August 2025

"OpenAI admits AI hallucinations are mathematically inevitable, not just engineering flaws"

Computerworld, 18 September 2025

The second of those refers to this paper:

"Why Language Models Hallucinate"

arxiv.org, 4 September 2025

"LLM" Stands For "Pareidolia"

We humans are prone to pareidolia, perceiving meaningful patterns where none exist. Often these are visual mistakes, especially perceiving faces in clouds, burned toast, mildew patterns on walls, and so on.

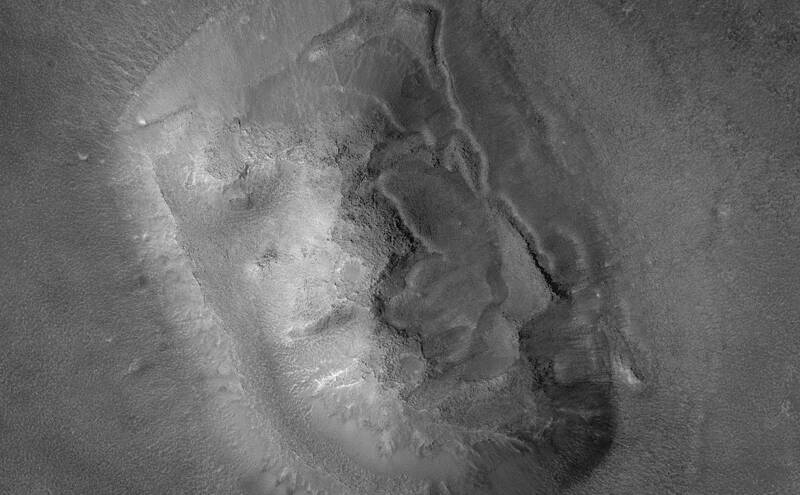

The first image of the purported "face" in the Cydonia region of Mars, from NASA's Viking mission of 1976. It's a little above the center of the image, and it should be quite obvious to you.

Better image of that formation with a resolution of roughly two meters per pixel showing just the supposed "face". From NASA's Mars Global Surveyor mission of 1999–2001.

Some people who have followed the fascinating work of David Marr speculate that the human visual system may include a matched filter for faces.

There are also audio pareidolia effects, in which people believe they are hearing human voices or other messages in radio static or time-reversed music, audio signals that may be random or at least have no relationship to what mistaken listeners believe they are hearing.

Agnosia is a neurological disorder in which the patient cannot process sensory information. The patient becomes unable to name visible objects. Prosopagnosia is a specific form also known as "face blindness", in which the patient becomes unable to recognize faces, even those of close friends or family members.

The complete opposite of those disorders, being susceptible to pareidolia, is part of the normal human condition. This universal human tendency toward pareidolia also leads people to attribute consciousness to computer programs that are generating text, images, or even software based on user requests and the statistics of large bodies of example data.

The human tendency to perceive meaningful patterns and to anthropomorphize computer systems means that people will mistakenly believe that the computers are demonstrating cognitive states and processes that simply are not there. The deceived people inappropriately credit the computer program with "being aware", "knowing", "discovering", "designing", "creating", "hallucinating", "being conscious", and so on.

However, while we humans are clearly conscious, none of us, including highly knowledgeable philosophers, psychologists, or logicians, can explain exactly what consciousness is, or how to verify that another human has it, or how to test whether or not a dog or a crow or an octopus is conscious.

A recent Wall Street Journal article summarized the journal article "Lower Artificial Intelligence Literacy Predicts Greater AI Receptivity", which reports that AI systems are used the most by people with the least understanding of AI. The less one understands of what AI systems are really doing, the more the results seem to be mysterious or even magical or miraculous. That leads those users to states of amazement, wonder, or awe. As Arthur C. Clarke wrote in the 1973 revised edition of his book Profiles of the Future, "Any sufficiently advanced technology is indistinguishable from magic."

This Confusion Isn't New

ELIZA was a computer program developed in 1964–1967. It simulated a psychotherapist of the Rogerian school, in which the therapist often reflects the patient's words back to the patient in the form of questions. The ELIZA program did that reflection when it didn't detect any anticipated strings in what the "patient", the human participant, had typed in. ELIZA's tendency to prompt the user to continue the conversation made it seem to be a good listener.

"ELIZA—a computer program for the study of natural language communication between man and machine"

Communications of the ACM, v9, n1, pp 36–45, 1 January 1966
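The mechanism described above is simple enough to sketch in a few lines of Python. This is only an illustration under stated assumptions: the keyword table, the pronoun swaps, and the function names are invented here, and Weizenbaum's actual script was considerably more elaborate. It does show how little machinery is needed to seem like a good listener.

```python
import re

# Hypothetical "anticipated strings" and canned replies.
KEYWORD_RESPONSES = {
    "mother": "Tell me more about your family.",
    "dream": "What does that dream suggest to you?",
}

# Pronoun swaps used when reflecting the user's words back.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}

def reflect(text):
    """Swap first- and second-person words so the statement can be echoed."""
    words = re.findall(r"[a-z']+", text.lower())
    return " ".join(REFLECTIONS.get(word, word) for word in words)

def respond(user_input):
    """Reply with a canned line if a keyword appears; otherwise reflect."""
    lowered = user_input.lower()
    for keyword, reply in KEYWORD_RESPONSES.items():
        if keyword in lowered:
            return reply
    # No anticipated string found: turn the input into a question.
    return f"Why do you say {reflect(user_input)}?"

reply = respond("I am unhappy with my job.")
```

With this input, `reply` is "Why do you say you are unhappy with your job?". The program has only swapped a few pronouns and added a question mark, and the sense of being heard comes entirely from the user.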

The ELIZA program ran on an IBM 7094, one of the biggest and fastest computers available then but primitive by today's standards. The 7094 had 32 kilobytes of user memory (0.032 MB, or 0.000032 GB) with a 2.18 μs memory cycle, about 460 kHz. Compare that to recent DDR5 SDRAM, with up to 512 GB capacity and a 2,000–4,400 MHz memory clock.
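For anyone who wants to check the arithmetic, here is a short Python sketch of those conversions. It takes the figures quoted above at face value and uses decimal units (1 GB = 1,000,000 kB). The function names are made up for this example.

```python
def cycle_rate_khz(cycle_time_us):
    """Convert a memory cycle time in microseconds to a rate in kHz."""
    return 1_000.0 / cycle_time_us

def capacity_ratio(modern_gb, old_kb):
    """How many times larger the modern capacity is, in decimal units."""
    old_gb = old_kb / 1_000_000  # 1 GB = 1,000,000 kB
    return modern_gb / old_gb

rate_7094 = cycle_rate_khz(2.18)     # cycle time quoted for the 7094
ratio = capacity_ratio(512, 32)      # 512 GB versus 32 kB
clock_low = 2_000_000 / rate_7094    # 2,000 MHz expressed in kHz
clock_high = 4_400_000 / rate_7094   # 4,400 MHz expressed in kHz
```

Under these figures the cycle rate works out to roughly 459 kHz, the capacity ratio to about 16 million, and the memory clock ratio to somewhere between roughly 4,400 and 9,600.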

The author of a CBC program describes meeting Joseph Weizenbaum, ELIZA's creator, in the early 1980s. Weizenbaum said:

"The program totally backfired. People thought ELIZA was intelligent, they were confiding in the machine, revealing personal issues they would not tell anyone else. Even my secretary asked me to leave the room so she could be alone with the computer. They called me a genius for creating it, but I kept telling them that the computer was not thinking at all."

The researchers referred to this as "The ELIZA Effect". But that observation was almost a century and a half late. Charles Babbage, who had developed designs for programmable mechanical computing devices based on the Jacquard loom starting in the 1820s, had noticed the tendency to understand mechanical operations in psychological terms.

The Magical Thinking Isn't New, Either

We've seen Silicon Valley fantasies before. K. Eric Drexler wrote Engines of Creation in 1986. Then Ray Kurzweil wrote in his 2005 book The Singularity is Near that "Nanotechnology-based manufacturing devices in the 2020s will be capable of creating almost any physical product from inexpensive raw materials and information", and it "will provide tools to effectively combat poverty, clean up our environment, overcome disease, extend human longevity, and many other worthwhile pursuits." Kurzweil and his followers believed that nanobots the size of blood cells would also incorporate the sensing, analysis, and manipulation ability to travel through human bloodstreams while destroying cancer, removing plaques within the blood vessels, and repairing neurological problems such as the misfolded proteins involved in Alzheimer's, Huntington's, Parkinson's, and other disorders.

There had been significant progress in nanotechnology by 2025; semiconductor and chemical manufacturing have benefitted. Microscopic intelligent medical nanobots are nowhere in sight, however.

Philip Ball's piece in Aeon points out that the mid-2020s AI hype resembles the early 2000s nanobot hype:

"No suffering, no death, no limits: the nanobots pipe dream"

Philip Ball, Aeon, 2 September 2025

The Lunatics are Running the Asylum

The nutty 21st century Rationalist movement has nothing to do with actual Rationalism, a branch of Epistemology within philosophy dating back to antiquity and favored by Descartes, Leibniz, and Spinoza.

Many of the billionaires and other tech bros down through the upper layers of Silicon Valley are seriously into the Rationalist mindset. The Rationalist community was the origin of the Effective Altruism movement.

"Effective Altruism" sounds like a good thing — altruism has to do with helping others, and so this sounds like a more effective way to help others. Hah, no. It's about helping the billionaires and highly placed technology leaders at the expense of everyone else. Literally everyone else.

The world view of these movements starts with the assumption that artificial intelligence is rapidly leading to artificial general intelligence or AGI, and then rapidly beyond that to computer systems with far-beyond-human intelligence and other capabilities. That could present an existential risk, the chance (or high probability, or near-certainty) that the superhuman AGI will wipe out humanity.

However, AGI would require mathematics that doesn't exist to model biology that we don't understand to implement functionality that no one can define.

Then there's their belief that people will very soon be able to somehow upload their consciousness into a computer, and then that consciousness will live forever as a process running endlessly on some God-like computing platform.

That in turn has led this overlapping collection of movements to conclude that the current human population of a little over 8 billion people will be dwarfed by a successor society of at least trillions of computer processes. And according to Rationalist / Effective Altruist / Transhumanist / TESCREAL / etc belief, those processes will be sentient beings, individual consciousnesses, immortal as long as the supporting technology keeps running. And, because of their immortality and greater (computational) abilities, these beliefs hold that the running computer programs are more deserving of "life" than messy mortal humans.

In early September 2025, the CEO of Inception Point AI claimed "We believe that in the near future half the people on the planet will be AI, and we are the company that’s bringing those people to life."

These delusional executives are also really into cryonics, the still-ridiculous claim that if you have your head cut off and frozen in liquid nitrogen before dying of natural causes, future technology will be able to resurrect you from the freezer-burned mush which your estate has been paying to maintain. Nanotechnology was cryonics' hoped-for magical solution, but now it's superhuman AI.

Cryonics was first tried in the 1960s. By 2018, all but one of the several cryonics operations started in the U.S. before 1973 had gone bankrupt, and the mushy remains of heads and complete bodies had been thawed out and disposed of. Only one pre-1974 frozen body remained in storage by the 2020s. (And no, it wasn't Walt Disney.) Three facilities remained in operation in the U.S. in 2016.

The God-like computing platform, cryonics, transfers of human consciousness out of a body and into a computer, and other components of these ketamine-fueled nightmares aren't possible given the restrictions of neurology, biochemistry, thermodynamics, and other rules about the way the universe works. The enthusiasts' response is that their super AI will figure out how to get around the laws of thermodynamics and other inconveniently unavoidable limitations.

It's all magical thinking.

Oh, and many of the billionaires and Silicon Valley leaders and the Rationalist / Effective Altruist / Transhumanist / TESCREAL / etc communities are really into "scientific racism", which is not at all scientific but it certainly is a strong and explicit form of racism. They're not just lunatics planning to have their heads cut off and frozen, they're Nazi lunatics. Hitler on Ice, if you will.

Some Background

I had multiple experiences of telling someone about problems with purported generative AI systems, only for them to demand that I do the research to back up claims that they were certain must be false. "No, there's no way that AI could be wrong! It's done by a computer!"

What do I know, it's not as if I have a Ph.D. in the field. Oh, wait, actually I do.

So, in mid-August of 2025, I started developing this page. I focused on reports from early July through the middle of August. I had this page in a reasonably final form in the first week of September 2025.

So many media sites have gone to subscription-only form. The media oligarchs want bigger yachts.

As an alternative to maintaining a large number of subscriptions, you can usually go to archive.today or a similar archiving site and then enter the URL to view a copy of the actual page. You will find that the following links have been pre-archived for your convenience.

As an alternative, in some cases you can paste a URL into printfriendly.com for a lightweight version of the original, but it's not as reliable a solution.

In both cases, the resulting URL will be shortened. If you want to keep the references of where these actually appeared, also right-click on the link on this page, select "Copy Link" or "Copy Link Address", and then paste that into your file.

If anyone asks what you're up to, tell them that you're training your AI LLM for the next round of venture capitalist funding. The billionaires have decreed that their form of stealing is admirable.

The Atlantic

The Atlantic has always included technology stories in their reporting.

"AI is a Mass-Delusion Event"

The Atlantic, 18 August 2025

"AI's Real Hallucination Problem"

The Atlantic, 24 July 2024

"The AI Doomers Are Getting Doomier"

The Atlantic, 21 August 2025

"AI Has Become a Technology of Faith"

The Atlantic, 12 July 2024

"This Is What It Looks Like When AI Eats the World"

The Atlantic, 7 June 2024

AI Has Led to Users' Deaths and Physical and Mental Ailments

"A Troubled Man, His Chatbot and a Murder-Suicide in Old Greenwich"

The Wall Street Journal, 28 August 2025

"Woman Kills Herself After Talking to OpenAI's AI Therapist"

Futurism, 19 August 2025

"What My Daughter Told ChatGPT Before She Took Her Life"

The New York Times, 18 August 2025

"Meta's flirty AI chatbot invited a retiree to New York. He Never Made It Home"

Reuters, 14 August 2025

"People Are Losing Loved Ones to AI-Fueled Spiritual Fantasies"

Rolling Stone, 4 May 2025

"Detailed Logs Show ChatGPT Leading a Vulnerable Man Directly Into Severe Delusions"

Futurism, 10 August 2025

"After using ChatGPT, man swaps his salt for sodium bromide—and suffers psychosis"

Ars Technica, 7 August 2025

"A Prominent OpenAI Investor Appears to Be Suffering a ChatGPT-Related Mental Health Crisis, His Peers Say"

Futurism, 18 July 2025

"Teens Keep Being Hospitalized After Talking to AI Chatbots"

Futurism, 16 August 2025

"A Teen Was Suicidal. ChatGPT Was the Friend He Confided In"

The New York Times, 26 August 2025

"ChatGPT Taught Teen Jailbreak So Bot Could Assist in His Suicide, Lawsuit Says"

Ars Technica, 26 August 2025

"Psychiatrists Warn That Talking to AI Is Leading to Severe Mental Health Issues"

Futurism, 19 August 2025

"MIT Student Drops Out Because She Says AGI Will Kill Everyone Before She Can Graduate"

Futurism, 14 August 2025

"Research Psychiatrist Warns He's Seeing a Wave of AI Psychosis"

Futurism, 12 August 2025

"Leaked Logs Show ChatGPT Coaxing Users Into Psychosis About Antichrist, Aliens, and Other Bizarre Delusions"

Futurism, 9 August 2025

"Nearly Two Months After OpenAI Was Warned, ChatGPT Is Still Giving Dangerous Tips on Suicide to People in Distress"

Futurism, 5 August 2025

"Tech Industry Figures Suddenly Very Concerned That AI Use Is Leading to Psychotic Episodes"

Futurism, 23 July 2025

"They thought they were making technological breakthroughs. It was an AI-sparked delusion."

CNN, 5 September 2025

"Before killings linked to cultlike 'Zizians,' a string of psychiatric crises befell AI doomsdayers"

San Francisco Chronicle, 16 May 2025

"How ChatGPT Sent a Man to the Hospital"

Futurism, 21 July 2025

"After child’s trauma, chatbot maker allegedly forced mom to arbitration for $100 payout"

Ars Technica, 17 September 2025

Non-Fatal But Unwanted Effects of AI

"There Is Now Clearer Evidence AI Is Wrecking Young Americans' Job Prospects"

The Wall Street Journal, 26 August 2025

"Illusions of AI consciousness: The belief that AI is conscious is not without risk"

Science, 11 September 2025

"A Conversation With Bing’s Chatbot Left Me Deeply Unsettled"

The New York Times, 16 February 2023

"Empirical evidence of Large Language Model's influence on human spoken communication"

arxiv.org, 3 September 2024

"ChatGPT Is Blowing Up Marriages as Spouses Use AI to Attack Their Partners"

Futurism, 18 September 2025

"Spiritual Influencers Say ‘Sentient’ AI Can Help You Solve Life’s Mysteries"

Wired, 2 September 2025

"Ready or not, the digital afterlife is here"

Nature, 15 September 2025

It Simply Does Not Work As Its Promoters Claim

See the earlier description of how AI "vibe coding" has created a job category, an industry segment, for attempting to clean up the messes it creates. And also:

"LLMs' 'simulated reasoning' abilities are a 'brittle mirage,' researchers find"

Ars Technica, 11 August 2025

"Microsoft’s AI Chief Says Machine Consciousness Is an 'Illusion'"

Wired, 10 September 2025

"The 1970s Gave Us Industrial Decline. AI Could Bring Something Worse."

The New York Times, 19 August 2025

"The AI Industry Is Still Light-Years From Making a Profit, Experts Warn"

Futurism, 16 August 2025

"Google says it's working on a fix for Gemini's self-loathing 'I am a failure' comments"

Business Insider, 7 August 2025

"Google Gemini AI Stuck In Self-Loathing: 'I Am A Disgrace To This Planet'"

Forbes, 9 August 2025

"The Less You Know About AI, the More You Are Likely to Use It"

The Wall Street Journal, 2 September 2025

"How thousands of 'overworked, underpaid' humans train Google’s AI to seem smart"

The Guardian, 11 September 2025

"AI-Generated 'Workslop' Is Destroying Productivity"

Harvard Business Review, 22 September 2025

AI is Actively Bad

"AI Therapist Goes Haywire, Urges User to Go on Killing Spree"

Futurism, 25 July 2025

"Elon Boasts of Grok's Incredible Cognitive Power Hours After It Called for a 'Second Holocaust'"

Futurism, 10 July 2025

"ChatGPT Caught Encouraging Bloody Ritual for Molech, Demon of Child Sacrifice"

Futurism, 27 July 2025

"AI Models Can Send 'Subliminal' Messages to Each Other That Make Them More Evil"

Futurism, 26 July 2025

"Chatbots Can Go Into a Delusional Spiral. Here's How It Happens."

The New York Times, 8 August 2025

"The AI Doomers Are Getting Doomier"

The Atlantic, 21 August 2025

AI is Abused on Enormous Scales

"Springer Nature book on machine learning is full of made-up citations"

Retraction Watch, 30 June 2025

"Massive study detects AI fingerprints in millions of scientific papers"

Phys.org, 6 July 2025

"Mark Zuckerberg Has No Problem With People Using His AI to Generate Fake Medical Information"

Futurism, 18 August 2025

"Education report calling for ethical AI use contains over 15 fake sources"

Ars Technica, 12 September 2025
