Secure Web Service with Nginx, OpenSSL, and Quantum-Safe Cryptography

Step-by-step notes for setting up a web server running

the latest protocols and cryptography for the best

performance and security.

We will use Nginx

for the HTTP/HTTPS service,

OpenSSL for the

cryptographic infrastructure,

and the liboqs Open Quantum Safe

library to "future-proof" your server

against quantum computing breakthroughs with what's called

post-quantum cryptography

or quantum-resistant cryptography.

I'll set up SNI or

name-based virtual hosting,

to host multiple sites on one server.

I'll configure the Nginx cryptography and protocols

to get a 95% or A+

rating from Qualys.

And, I'll set up HTTP security headers to follow

best practice.

A current operating system should provide most of

what you need, except for an OQS-ready version of

the Nginx web server —

I'll show you how to build that from source,

along with the OQS pieces if you need that.

Like many of my technical pages,

this serves as an aide-mémoire for me.

I figured all this out once,

and this helps when I need to return to it.

You will see that I have simply included blocks of my

heavily commented nginx.conf file

with some added explanations.

I find this useful,

and I hope that you will, too.


You Will Need Certificates

You might want to start by getting your certificates lined up.

See my pages for how to get, and automatically renew,

free TLS certificates from Let's Encrypt.

How to Use Let's Encrypt ECC and RSA TLS Certificates

OpenSSL

OpenSSL provides the cryptographic framework.

It includes the openssl command, with subcommands such as s_client (along with many others),

plus a large shared library of cryptographic functions

that other programs can use.

You need OpenSSL 3.x to support Open Quantum Safe. Version 3.0.0 was released on 7 September 2021, so a current operating system should include it. If not, check whether your package management system offers extra packages with newer versions of some utilities, or whether it can reference an added package repository, as with the EPEL collection for Red Hat, Oracle Linux, etc.

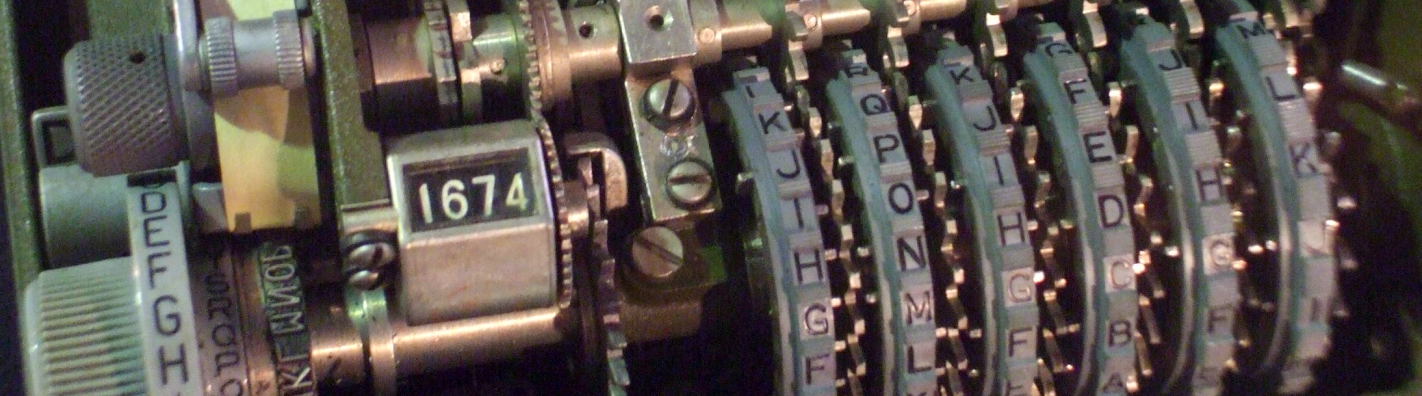

Wait, "Quantum-Safe"? "Post-Quantum"?

We usually use hybrid cryptography to protect information — symmetric cryptography to protect the data itself, after having used asymmetric cryptography to protect the authentication and the session keys.

When establishing a connection, the end points use asymmetric cryptography, sometimes called public-key cryptography, first to authenticate, and then to establish a shared session key.

The shared session key will be used by the symmetric cipher that will protect the data. It will be an ephemeral session key, meaning that it will be randomly generated and then used only for this one connection session. If you re-connect and transfer the same data, a new unique ephemeral key would be generated.

The symmetric cipher will typically be AES, or possibly the ChaCha20/Poly1305 stream cipher which can be used with TLS.

An asymmetric cipher is based on a "trapdoor" function. That's a problem that is enormously, impractically more difficult to do in one direction than in the other. We can safely conclude that there is no practical solution, but it would be quite easy to test whether or not a proposed solution is correct.

How RSA Works

The factoring problem has been a traditional trapdoor function used for session key agreement. The security of the RSA algorithm is based on the difficulty of factoring the product of two large prime numbers. How large? For a 4096-bit RSA key, two prime numbers of about 617 digits each are multiplied together to form a product of about 1,233 digits. The 4,096-bit product, together with a public exponent, is the public key, and the two roughly 2,048-bit prime numbers are the private key.
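As a quick check on those sizes, here is a minimal Python sketch that counts the decimal digits of numbers with a given bit length. The function name `digit_range` is only illustrative.

```python
def digit_range(bits: int) -> tuple[int, int]:
    """Decimal digit counts of the smallest and largest integers
    that need exactly `bits` bits."""
    smallest = 1 << (bits - 1)      # 2**(bits-1), top bit set, all others clear
    largest = (1 << bits) - 1       # 2**bits - 1, every bit set
    return len(str(smallest)), len(str(largest))


print("2048-bit prime:  ", digit_range(2048))
print("4096-bit modulus:", digit_range(4096))
```

A 2048-bit prime always has 617 digits, and a 4096-bit modulus has 1,233 or 1,234.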

How Elliptic-Curve Cryptography Works

The classic Diffie-Hellman scheme from the 1970s uses the discrete logarithm problem as its trapdoor. For elliptic-curve cryptography or ECC, the trapdoor function is the related elliptic-curve discrete logarithm problem.

Unfortunately, the factoring and discrete logarithm problems could be quickly solved by a quantum computer with enough stable qubits running Shor's algorithm. That would break either of those trapdoor functions, exposing the session keys and allowing an attacker to decrypt the ciphertext.

Richard Feynman suggested quantum computing in 1981, and when Peter Shor published his algorithm in 1994, the cryptographic world realized that quantum computing would be a threat.

Researchers began working on what has been variously called post-quantum, quantum-safe, and quantum-resistant cryptography. The PQCrypto conference series began running in 2006. The goal is the development of asymmetric algorithms unbreakable with Shor's algorithm. Or, ideally, unbreakable through quantum computing in general.

The Open Quantum Safe project (GitHub page here) provides open-source software implementing the quantum-resistant algorithms we have so far. The U.S. National Institute of Standards and Technology has led research projects, workshops, conferences, and competitions for PQC, selecting algorithms to become standards.

Grover's algorithm was published just two years after Shor's. It is useful in an attack against a symmetric cipher. But it is not nearly the threat that Shor's algorithm poses to our traditional asymmetric ciphers. 256-bit AES would be reduced to 128-bit strength against a large, stable quantum computer running Grover's algorithm. That's not as strong as the pre-quantum case, but it's still pretty strong.
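To see where that halving comes from, assume the usual estimate that Grover's algorithm searches \(N\) candidates in roughly \(\sqrt{N}\) steps. For a \(k\)-bit key there are \(N = 2^k\) candidates, so $$ \sqrt{2^{k}} = 2^{k/2}, \qquad \sqrt{2^{256}} = 2^{128}, \qquad \sqrt{2^{128}} = 2^{64}. $$ This is one reason 256-bit symmetric keys are commonly recommended when planning for quantum attackers.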

U.S. NIST Finalized Post-Quantum Algorithms in August 2024

I initially worked on this in late 2023, and then revisited it in the spring of 2024. I got a customized version of Nginx running the quantum-safe cryptography, but it was a complicated and tedious setup involving draft releases and ambiguous algorithm names. The submissions had names chosen by the research and development teams, including "Kyber" and "Dilithium" to reference fictional crystals from the Star Wars and Star Trek universes. Disappointingly, no Stargate-watching cryptographers submitted a "Naqadah" cipher. The development names were used with and without numbers referencing key sizes, and with varying capitalization.

Then U.S. NIST made a final selection of post-quantum algorithms in August 2024. A few months later, more of the components were ready to go, at least on FreeBSD. And, once both Chrome and Firefox began supporting NIST-defined standards, there was much more of a point to this! I was reminded to return to this in March 2025, when NIST selected HQC as a backup choice for key encapsulation for general-purpose encryption.

"NIST finalizes trio of post-quantum encryption standards" (The Register)

FIPS 203: Module-Lattice-Based Key-Encapsulation Mechanism Standard

FIPS 204: Module-Lattice-Based Digital Signature Standard

FIPS 205: Stateless Hash-Based Digital Signature Standard

NIST Frequently-Asked Questions on Post-Quantum Cryptography

"NIST Selects HQC as Fifth Algorithm for Post-Quantum Encryption"

Slight confusion lingers. A component project is named oqs-provider, but its shared library and configuration strings omit the dash.

The initial selection in August 2024 included one Key-Encapsulation Mechanism or KEM, a replacement for trapdoor functions in asymmetric cryptography, and two new digital signature standards, new ways to prove message integrity and sender authentication. The new KEM and one of the signature methods are based on module-lattice problems.

How Lattice Problems Work

I'm using MathJax to do math within HTML.

A lattice is an infinite set of points in \(n\)-dimensional space \(ℝ^n\) such that coordinate-wise addition or subtraction of two lattice points produces another point in the lattice. See my page on lattice problems as used in PQC for more details.

Lattice problems are a class of optimization problems related to geometric or group-theoretic aspects of lattices. Some are thought to be NP-hard, meaning that if P ≠ NP as we suspect, there would be no polynomial-time algorithms to solve them.

Learning With Errors is a category of lattice problem that could be used in cryptography. It represents secret information as a set of equations with errors — the actual value of the secret is hidden by the noise added to the representation. It's the problem of inferring the linear \(n\)-ary function \(f\) over a finite ring from provided samples $$ y_i = f(x_i), $$ some of which are erroneous. The errors, the deviation from equality, follow some known noise model. The problem comes down to finding the function \(f\) or some close approximation with high probability.

Learning With Errors or LWE was introduced in 2005, and brought its author the 2018 Gödel Prize for outstanding computer science work. Like RSA with small primes or elliptic-curve cryptography over a small field, a simplified LWE scenario makes the concepts fairly easy to grasp. But then the practical implementations enormously enlarge the search space, making it extremely difficult to find a solution.
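To make the "simplified LWE scenario" concrete, here is a toy Python sketch of single-bit encryption in the style of the original 2005 LWE scheme. Everything about it is an assumption chosen for readability: the tiny parameters `Q`, `N`, and `M`, the noise drawn from {-1, 0, 1}, and the function names. It is not ML-KEM and offers no security.

```python
import random

Q = 257   # small prime modulus (toy value)
N = 8     # length of the secret vector (toy value)
M = 20    # number of noisy public samples (toy value)


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def keygen(rng):
    """Secret vector plus M public samples (a, <a, secret> + e mod Q)."""
    secret = [rng.randrange(Q) for _ in range(N)]
    samples = []
    for _ in range(M):
        a = [rng.randrange(Q) for _ in range(N)]
        e = rng.choice((-1, 0, 1))          # the small "error" that hides the secret
        samples.append((a, (dot(a, secret) + e) % Q))
    return secret, samples


def encrypt(samples, bit, rng):
    """Add up a random subset of the samples; shift by Q//2 to encode a 1."""
    subset = [s for s in samples if rng.random() < 0.5]
    c1 = [sum(a[i] for a, _ in subset) % Q for i in range(N)]
    c2 = (sum(b for _, b in subset) + bit * (Q // 2)) % Q
    return c1, c2


def decrypt(secret, ciphertext):
    """Remove <c1, secret>; what remains is accumulated noise, plus Q//2 for a 1."""
    c1, c2 = ciphertext
    d = (c2 - dot(c1, secret)) % Q
    return 1 if Q // 4 < d < 3 * Q // 4 else 0


rng = random.Random(2025)
secret, samples = keygen(rng)
for bit in (0, 1, 1, 0):
    assert decrypt(secret, encrypt(samples, bit, rng)) == bit
```

Decryption always succeeds here because the accumulated noise is at most 20 in magnitude, well under Q/4. Without the secret, an attacker has to solve the noisy linear system, which is easy at this size and is believed to be hard at practical sizes.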

Lattice problem (Wikipedia)

Learning with errors (Wikipedia)

Lattice-based cryptography (Wikipedia)

Kyber / ML-KEM (Wikipedia)

"Prepping for post-quantum: a beginner's guide to lattice cryptography" (Cloudflare)

The keys used with quantum-safe algorithms are much larger than those for ECC algorithms thought to be of equal strength. ML-KEM-768 uses 2400-byte private keys and 1184-byte public keys. Those sizes are in bytes and not bits. However, the ML-KEM-768 runtime is only about 2.3 times that of ECC with X25519.

So, a hybrid algorithm is used, combining an ECC key agreement with a quantum-safe KEM. The combination is not either-or, it is and. Both run during the same TLS handshake, each producing its own shared secret, and the two secrets are concatenated and fed together into the TLS key derivation. An attacker must break both algorithms to recover the session keys.

This way, if a general-purpose many-qubit quantum computer appears tomorrow, ECC with X25519 is broken but hybrid key agreement is still protected by ML-KEM-768.

On the other hand, if, despite all the careful analysis done so far, ML-KEM-768 is found to be flawed, hybrid key agreement is still protected by ECC with X25519. And, a second, backup post-quantum algorithm has been tentatively selected.
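The "and, not either-or" idea can be sketched in a few lines of Python. This is a simplified illustration, not the TLS 1.3 key schedule. The all-zero salt is a placeholder, the concatenation order is an assumption for illustration (each hybrid group's specification fixes its own order), and the function names are mine. The 1088-byte ML-KEM-768 ciphertext size comes from FIPS 203.

```python
import hashlib
import hmac

MLKEM768_PUBLIC_KEY = 1184   # bytes
MLKEM768_CIPHERTEXT = 1088   # bytes, per FIPS 203
X25519_SHARE = 32            # bytes


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract from RFC 5869, here with SHA-384."""
    return hmac.new(salt, ikm, hashlib.sha384).digest()


def hybrid_secret(mlkem_secret: bytes, x25519_secret: bytes) -> bytes:
    """Concatenate the two 32-byte component secrets (order assumed)."""
    if len(mlkem_secret) != 32 or len(x25519_secret) != 32:
        raise ValueError("each component shared secret is 32 bytes")
    return mlkem_secret + x25519_secret


def handshake_secret(mlkem_secret: bytes, x25519_secret: bytes,
                     salt: bytes = b"\x00" * 48) -> bytes:
    """Mix both secrets into one value; the salt is only a placeholder."""
    return hkdf_extract(salt, hybrid_secret(mlkem_secret, x25519_secret))


# Bytes each side sends for the hybrid key share:
client_share = MLKEM768_PUBLIC_KEY + X25519_SHARE   # public key + X25519 share
server_share = MLKEM768_CIPHERTEXT + X25519_SHARE   # ciphertext + X25519 share
print(client_share, server_share)
```

Because the derived secret depends on every byte of both inputs, knowing only one component (say, an X25519 secret recovered by a quantum computer) is not enough to reproduce it.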

liboqs — Open Quantum Safe

liboqs FreeBSD

liboqs is the Open Quantum Safe

shared library.

You need this library.

On FreeBSD, which this server runs,

liboqs is available as a package.

That package includes the shared library file

/usr/local/lib/liboqs.so

and also the files /usr/local/include/oqs/*.h

needed to compile programs which will use that library.

If you need to compile your own liboqs library, see the project's GitHub page for full details.

oqs-provider — Open Quantum Safe

Starting with major version 3,

OpenSSL supports "providers",

modules for adding capabilities like FIPS compliance

and post-quantum algorithms.

The shared library file

oqsprovider.so

adds post-quantum algorithms to OpenSSL.

At least while I'm writing this,

we have to build the Nginx web server from source

so it will be linked to use the liboqs.so

shared library.

When you build Nginx,

it uses the OpenSSL source tree to include the

libssl.a OpenSSL library

into the Nginx binary as a static library.

So, we'll also set up a version of OpenSSL

with the post-quantum provider.

On FreeBSD, the provider library is in:

/usr/local/lib/ossl-modules/oqsprovider.so

I have some notes on building oqs-provider on FreeBSD. For Linux, also see the Open Quantum Safe oqs-provider page.

Compile Nginx With liboqs

I first did this in March 2025. Since then I have updated the core FreeBSD OS and the added OpenSSL packages.

I started by installing an additional pre-compiled OpenSSL

package.

FreeBSD comes with OpenSSL in the base system,

and offers newer versions as additional packages that will

install in /usr/local/.

With FreeBSD 14.2 with the openssl34

package added, I see:

$ pkg search openssl3     # what named "*openssl3*" is available?
openssl31-3.1.8 TLSv1.3 capable SSL and crypto library
openssl31-quictls-3.1.7_1 QUIC capable fork of OpenSSL
openssl32-3.2.4 TLSv1.3 capable SSL and crypto library
openssl33-3.3.3 TLSv1.3 capable SSL and crypto library
openssl34-3.4.1 TLSv1.3 capable SSL and crypto library
$ pkg info | grep openssl     # what named "*openssl*" is installed?
openssl-oqsprovider-0.8.0_1 quantum-resistant cryptography provider for OpenSSL
openssl34-3.4.1 TLSv1.3 capable SSL and crypto library
$ pkg info | grep oqs
liboqs-0.12.0 C library for quantum-resistant cryptography
openssl-oqsprovider-0.8.0_1 quantum-resistant cryptography provider for OpenSSL
$ which openssl
/usr/bin/openssl
$ /usr/bin/openssl version     # version installed with base OS
OpenSSL 3.0.16 11 Feb 2025 (Library: OpenSSL 3.0.16 11 Feb 2025)
$ /usr/local/bin/openssl version     # version of added package
OpenSSL 3.4.1 11 Feb 2025 (Library: OpenSSL 3.4.1 11 Feb 2025)

Download Nginx Source Code

Download OpenSSL Source Code

Download the source code archive files for both Nginx and OpenSSL, choosing the same OpenSSL version as the additional updated package. For me, that's 3.4.1. Verify the PGP signatures to make sure you got the real code from the real projects.

We won't directly use the OpenSSL source code tree,

but the Nginx compilation uses it to build and embed

libssl.a as a static library within

the nginx binary.

The configuration file

/usr/local/openssl/openssl.cnf

from the added OpenSSL 3.4.1 package

will be compatible with the OpenSSL 3.4.1 library routines

compiled into Nginx.

Extract both archives. I did this in my home directory:

$ tar xf nginx-1.26.3.tar.gz
$ tar xf openssl-3.4.1.tar.gz

Plan your build.

I put my custom-built Nginx under

/usr/local/nginx-x.y.z

so I could have multiple custom-built versions of Nginx

installed at the same time,

plus the standard non-PQC version available

as a package.

Then /usr/local/nginx can be a symbolic link

pointing to whichever version I want to be using by default.

The directory contains files and subdirectories so that,

among other pieces:

conf/nginx.conf is the configuration file

html/ is the default web root

sbin/nginx is the executable

ssl_dhparam holds the Diffie-Hellman parameters for classic DHE key agreement

Accelerate the software compilation,

if you can.

My server is a FreeBSD system running in the

Google Cloud Platform.

Running dmesg immediately after booting

reports this about the CPU and memory:

[... Lines omitted ...]
CPU: AMD EPYC 7B12 (2250.13-MHz K8-class CPU)
  Origin="AuthenticAMD" Id=0x830f10 Family=0x17 Model=0x31 Stepping=0
  Features=0x1783fbff<FPU,VME,DE,PSE,TSC,MSR,PAE,MCE,CX8,APIC,SEP,MTRR,PGE,MCA,CMOV,PAT,PSE36,MMX,FXSR,SSE,SSE2,HTT>
  Features2=0xfef83203<SSE3,PCLMULQDQ,SSSE3,FMA,CX16,SSE4.1,SSE4.2,x2APIC,MOVBE,POPCNT,AESNI,XSAVE,OSXSAVE,AVX,F16C,RDRAND,HV>
  AMD Features=0x2e500800<SYSCALL,NX,MMX+,FFXSR,Page1GB,RDTSCP,LM>
  AMD Features2=0x4003f3<LAHF,CMP,CR8,ABM,SSE4A,MAS,Prefetch,OSVW,Topology>
  Structured Extended Features=0x219c01ab<FSGSBASE,TSCADJ,BMI1,AVX2,SMEP,BMI2,RDSEED,ADX,SMAP,CLFLUSHOPT,CLWB,SHA>
  Structured Extended Features2=0x400004<UMIP,RDPID>
  XSAVE Features=0x7<XSAVEOPT,XSAVEC,XINUSE>
  AMD Extended Feature Extensions ID EBX=0x10cd005<CLZERO,XSaveErPtr,IBPB,IBRS,STIBP,PREFER_IBRS,SAMEMODE_IBRS,SSBD>
  TSC: P-state invariant
Hypervisor: Origin = "KVMKVMKVM"
real memory = 1073741824 (1024 MB)
avail memory = 1003302912 (956 MB)
Event timer "LAPIC" quality 600
ACPI APIC Table: <Google GOOGAPIC>
FreeBSD/SMP: Multiprocessor System Detected: 2 CPUs
FreeBSD/SMP: 1 package(s) x 1 core(s) x 2 hardware threads
random: registering fast source Intel Secure Key RNG
random: fast provider: "Intel Secure Key RNG"
random: unblocking device.
ioapic0 <Version 1.1> irqs 0-23
[... For some reason the platform emulates an NTSC color-burst crystal ...]
Timecounter "ACPI-fast" frequency 3579545 Hz quality 900
acpi_timer0: <24-bit timer at 3.579545MHz> port 0xb008-0xb00b on acpi0
[... Many more lines omitted ...]

With one CPU that supports hyperthreading,

you might think that the

-j 2

option to the make command

would make the builds run faster.

Yes, usually that would be the case —

use a parameter that is the number of CPU cores,

twice that if they're hyperthreaded.

However, my server is on the Google Cloud platform,

at the Free Tier level.

The CPU and 1 GB of RAM are free;

I only have to pay for egress or outbound traffic.

The Free Tier allows

just a short burst of full CPU utilization

and then throttles it to half the possible maximum.

That is, a short burst at 200% then back to

100% of one CPU, 50% of the platform's capacity.

Compiling takes longer, but for me the price is right.

Here's what the Google Cloud console showed me

about CPU utilization while

make -j 2

had been running for almost 50 minutes to build OpenSSL:

Advanced topic: Consider using Poudriere to create and test this as a FreeBSD package.

Configure your Nginx build.

Tell it how to build the binary,

where to find the

liboqs components

and the OpenSSL source code,

and where to install itself.

Change /home/cromwell to wherever you have

the OpenSSL source tree,

and change the Nginx and OpenSSL version numbers as needed.

Notice the added --with-cc-opt

and --with-ld-opt parameters,

needed to use the liboqs library.

$ tar xf nginx-1.26.3.tar.gz

$ cd nginx-1.26.3

$ export LD_LIBRARY_PATH=/usr/local/lib:/lib:/usr/lib

$ ./configure --prefix=/usr/local/nginx-1.26.3 \

--with-http_ssl_module \

--with-http_v2_module \

--with-http_v3_module \

--with-http_gunzip_module \

--with-http_gzip_static_module \

--with-http_sub_module \

--with-threads \

--with-openssl=/home/cromwell/openssl-3.4.1 \

--with-cc-opt="-I /usr/local/include/oqs" \

--with-ld-opt="-L /usr/local/lib"

[... Narrative output follows...]

We also need to add something to one line in a Makefile.

The sed on FreeBSD and macOS expects a backup-suffix argument after -i,

so a GNU-style in-place edit fails here.

Use sed to make a new file,

then move it into place over the old one.

$ sed 's/libcrypto.a/libcrypto.a -loqs/' objs/Makefile > /tmp/Makefile
$ mv /tmp/Makefile objs/Makefile

Now build Nginx.

$ make
[... much output, about 50 minutes of compiling ...]
$ sudo make install
[... asked for root password, a little output ...]

I'll set up symbolic links pointing to my configuration

and content; change the details as needed.

First, the generic nginx location:

# cd /usr/local
# rm -f nginx
# ln -s nginx-1.26.3 nginx

Now the components within that:

# cd /usr/local/nginx
# mv html html-original
# ln -s /home/cromwell/www html
# ln -s /home/cromwell/www/nginx/ssl_dhparam ssl_dhparam
# rm conf/nginx.conf
# ln -s /home/cromwell/www/nginx/nginx.conf conf/nginx.conf

Enable oqs-provider in OpenSSL

and the newly built Nginx.

To do this, merge the contents of

oqsprovider.cnf

into

openssl.cnf

as you're instructed while installing

the oqsprovider package.

$ cat /usr/local/openssl/oqsprovider.cnf
# Replace the existing [provider_sect] and [default_provider] sections
# with this config
[provider_sect]
default = default_sect
oqsprovider = oqsprovider_sect

[default_sect]
activate = 1

[oqsprovider_sect]
activate = 1
module = /usr/local/lib/ossl-modules/oqsprovider.so

Now you could, for example:

$ /usr/local/bin/openssl s_client -curves X25519MLKEM768 -connect cromwell-intl.com:443

We built nginx

with the --with-openssl parameter,

so we must set an environment variable for it when

we're using any quantum-safe algorithms from liboqs:

# /usr/local/nginx/sbin/nginx -t

nginx: [emerg] SSL_CTX_set1_curves_list("p521_mlkem1024:p384_mlkem1024:x448_mlkem768:X25519MLKEM768:SecP256r1MLKEM768:mlkem1024:mlkem768:mlkem512:X448:X25519:secp521r1:secp384r1") failed

nginx: configuration file /usr/local/nginx-1.26.3/conf/nginx.conf test failed

-- but --

# export OPENSSL_CONF=/usr/local/openssl/openssl.cnf

# /usr/local/nginx/sbin/nginx -t

nginx: the configuration file /usr/local/nginx-1.26.3/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/nginx-1.26.3/conf/nginx.conf test is successful

So, my /etc/rc.local gets an added line

to set the variable and

start my custom-built nginx:

$ cat /etc/rc.local
#!/bin/sh

# Save dmesg at each boot:
dmesg > /var/log/dmesg

# Start custom-built nginx with liboqs:
OPENSSL_CONF=/usr/local/openssl/openssl.cnf /usr/local/nginx/sbin/nginx

Now I have a version of nginx

capable of post-quantum cryptography!

And so, I need to figure out how to use PQC...

Which Key Exchange and Cipher Algorithms Should I Support?

For some time I have had my server supporting just TLSv1.3 and TLSv1.2, with TLSv1.2 supporting only the more secure cipher suites. Analyzing the logs for all of 2024 plus the first two months of 2025, I saw the following for TLS versions, key exchange, and cipher suites:

number percentage version KEX cipher suite

6,400,816 66.875412% TLSv1.3 X25519 TLS_AES_256_GCM_SHA384

2,289,691 23.921864% TLSv1.2 secp521r1 ECDHE-ECDSA-AES256-GCM-SHA384

329,829 3.445934% TLSv1.2 X25519 ECDHE-ECDSA-AES256-GCM-SHA384

230,163 2.404660% TLSv1.3 secp384r1 TLS_AES_256_GCM_SHA384

196,147 2.049273% TLSv1.3 secp521r1 TLS_AES_256_GCM_SHA384

51,701 0.540153% TLSv1.2 X448 ECDHE-ECDSA-AES256-GCM-SHA384

27,757 0.289995% TLSv1.2 secp521r1 ECDHE-ECDSA-AES128-GCM-SHA256

27,620 0.288564% TLSv1.3 X25519 TLS_CHACHA20_POLY1305_SHA256

8,185 0.085514% TLSv1.2 secp384r1 ECDHE-ECDSA-AES256-GCM-SHA384

3,372 0.035229% TLSv1.3 secp384r1 TLS_CHACHA20_POLY1305_SHA256

1,760 0.018388% TLSv1.2 secp521r1 ECDHE-RSA-AES128-GCM-SHA256

1,682 0.017573% TLSv1.2 secp384r1 ECDHE-ECDSA-CHACHA20-POLY1305

1,682 0.017573% TLSv1.2 X25519 ECDHE-ECDSA-CHACHA20-POLY1305

376 0.003928% TLSv1.2 secp521r1 ECDHE-RSA-AES256-GCM-SHA384

251 0.002622% TLSv1.2 X448 ECDHE-ECDSA-CHACHA20-POLY1305

176 0.001839% TLSv1.2 secp384r1 ECDHE-ECDSA-AES128-GCM-SHA256

143 0.001494% TLSv1.2 secp384r1 ECDHE-RSA-CHACHA20-POLY1305

76 0.000794% TLSv1.2 4096 bit DH DHE-RSA-AES256-GCM-SHA384

39 0.000407% TLSv1.2 secp384r1 ECDHE-RSA-AES256-GCM-SHA384

21 0.000219% TLSv1.2 secp521r1 ECDHE-ECDSA-CHACHA20-POLY1305

15 0.000157% TLSv1.2 X448 ECDHE-RSA-AES256-GCM-SHA384

14 0.000146% TLSv1.3 X448 TLS_AES_256_GCM_SHA384

11 0.000115% TLSv1.2 secp384r1 ECDHE-RSA-AES128-GCM-SHA256

8 0.000084% TLSv1.3 X25519 TLS_AES_128_GCM_SHA256

3 0.000031% TLSv1.2 X25519 ECDHE-RSA-CHACHA20-POLY1305

1 0.000010% TLSv1.2 secp521r1 ECDHE-RSA-CHACHA20-POLY1305

1 0.000010% TLSv1.2 X25519 ECDHE-RSA-AES256-GCM-SHA384

1 0.000010% TLSv1.2 X25519 ECDHE-ECDSA-AES128-GCM-SHA256

Obviously, some of these protocol/KEX/cipher combinations are only used by research bots inventorying what's supported across the Internet. I was most surprised to see how dominant ECC or elliptic-curve cryptography is for key exchange — 99.999206% ECC versus just 0.000794% classic Diffie-Hellman with exponentiation and modulo.
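A table like the one above can be produced from the access logs with a short script. This is a minimal Python sketch, assuming each log line ends with the three fields $ssl_protocol, $ssl_curve, and $ssl_cipher, as in the log_format shown later on this page, and that plain-HTTP requests log "-" for them. The function names and the sample lines are hypothetical.

```python
from collections import Counter


def tally_tls(lines):
    """Count (protocol, key exchange, cipher) triples from the last three fields."""
    counts = Counter()
    for line in lines:
        fields = line.split()
        if len(fields) < 3:
            continue
        protocol, curve, cipher = fields[-3:]
        if not protocol.startswith("TLSv"):
            continue                      # plain HTTP, nothing to count
        counts[(protocol, curve, cipher)] += 1
    return counts


def report(counts):
    """Format rows as: count, percentage, version, KEX, cipher suite."""
    total = sum(counts.values())
    return [
        f"{n:>10,} {100 * n / total:10.6f}% {protocol} {curve} {cipher}"
        for (protocol, curve, cipher), n in counts.most_common()
    ]


sample = [
    '203.0.113.5 - - [01/Mar/2025:00:00:01 +0000] "GET / HTTP/2.0" 200 1234 "-" TLSv1.3 X25519 TLS_AES_256_GCM_SHA384',
    '203.0.113.6 - - [01/Mar/2025:00:00:02 +0000] "GET / HTTP/2.0" 200 1234 "-" TLSv1.3 X25519 TLS_AES_256_GCM_SHA384',
    '198.51.100.7 - - [01/Mar/2025:00:00:03 +0000] "GET / HTTP/1.1" 200 99 "-" TLSv1.2 secp521r1 ECDHE-ECDSA-AES256-GCM-SHA384',
    '192.0.2.9 - - [01/Mar/2025:00:00:04 +0000] "GET / HTTP/1.1" 301 0 "-" - - -',
]
for row in report(tally_tls(sample)):
    print(row)
```

To run it over real logs, pass an open file (or several chained with itertools.chain) to tally_tls in place of the sample list.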

Nginx and HTTP

HTTP/1 was finalized in 1996, and updated as 1.1 in 1997. HTTP/2 came out in 2015, and HTTP/3 in 2022. Of course we prefer the latest and greatest as they have better performance and capabilities. But we also want to support the older versions still used by web indexing crawlers. And, unfortunately, also used by bots looking for vulnerable servers. During February and March 2025, when I had solid HTTP/3 support, I saw these request counts:

$ cat *access.log | awk '{print $8}' |

sed 's/".*//' | sort | uniq -c

3560 0.888% HTTP/1.0

268397 66.932% HTTP/1.1

113500 28.304% HTTP/2.0

15540 3.875% HTTP/3.0

The ancient HTTP/1.0 requests are seemingly from 1996-1997! About a third of those are from 52.22.66.203, the W3C validators. I'll assume that the rest are bots, research projects, and buggy clients.

I'm surprised by HTTP/1.1 being two-thirds of the requests! But it seems that search engine indexing bots and Mastodon nodes tend to run HTTP/1.1. I need to support HTTP/2 for performance, and HTTP/3 for even better performance, but I also must support HTTP/1.
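The shell pipeline above yields the counts; the percentages need one more step. Here is a minimal Python sketch that does both, assuming the request is logged in double quotes as "METHOD path HTTP/x.y". The regular expression and function names are illustrative.

```python
import re
from collections import Counter

# Matches the protocol at the end of the quoted request, e.g. "GET / HTTP/1.1"
REQUEST_VERSION = re.compile(r'"[A-Z]+ \S+ (HTTP/\d\.\d)"')


def tally_http_versions(lines):
    """Count requests per HTTP version, skipping lines without a parsable request."""
    counts = Counter()
    for line in lines:
        match = REQUEST_VERSION.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def with_percentages(counts):
    """Map each version to (count, percentage rounded to three places)."""
    total = sum(counts.values())
    return {v: (n, round(100 * n / total, 3)) for v, n in sorted(counts.items())}


# The request counts reported above, fed back in as a check:
observed = Counter({"HTTP/1.0": 3560, "HTTP/1.1": 268397,
                    "HTTP/2.0": 113500, "HTTP/3.0": 15540})
for version, (n, pct) in with_percentages(observed).items():
    print(f"{n:>7} {pct:>7.3f}% {version}")
```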

HTTP/3 runs on top of QUIC, a name that began as "Quick UDP Internet Connections" but is no longer treated as an acronym.

That uses UDP to roughly simulate TCP,

with much faster connection setup

and a solution to the

TCP "head-of-line blocking" problem.

QUIC runs on UDP port 443,

so make sure to also open that on the firewall

before proceeding.

You can test your HTTP/3 / QUIC service at

http3check.net,

and learn about HTTP/3 at

SmashingMagazine.com.

Part 1: Core Concepts

Part 2: Performance Improvements

Part 3: Practical Deployment Options

The nginx.conf file has

the following overall structure.

My server is hosting

cromwell-intl.com,

toilet-guru.com,

and two other sites.

The following works on Nginx 1.25.1 and later.

Version 1.25.0 was the first release of Nginx to support QUIC and HTTP/3, and 1.25.1 added the http2 directive used here.

See the following sections for content and discussion of the

shared http settings

and the server { ... } blocks.

Starting here, I will use

greyed-out text to

refer to omitted content,

italic text for comments, and

bold text for configuration content.

# Let the server start one worker process per CPU core,
# allow many connections per worker.
worker_processes auto;
events {
    worker_connections 1024;
}

http {
    [... Define everything that can be shared by all sites ...]

    server {
        listen 80;
        server_name cromwell-intl.com www.cromwell-intl.com;
        [... Where and what to log ...]
        [... Redirect to HTTPS on port 443 ...]
    }

    server {
        listen 80;
        server_name toilet-guru.com www.toilet-guru.com;
        [... Where and what to log ...]
        [... Redirect to HTTPS on port 443 ...]
    }

    [... Two other server { listen 80 } blocks ...]

    server {
        listen 443 quic;
        listen 443 ssl;
        http2 on;
        server_name _;
        [... This is an intentionally bogus definition. Modern browsers
             will ignore it because "_" doesn't match a hostname. See
             the below explanation. ...]
    }

    server {
        listen 443 quic reuseport;
        listen 443 ssl;
        http2 on;
        server_name cromwell-intl.com www.cromwell-intl.com;
        [... HTTPS details for that site ...]
    }

    server {
        listen 443 quic reuseport;
        listen 443 ssl;
        http2 on;
        server_name toilet-guru.com toilet-guru-intl.com;
        [... HTTPS details for that site ...]
    }

    [... Two other server HTTPS blocks ...]
}

Why the Bogus "_" Block?

A web server can host multiple web sites, each with a unique "web root" location. All of the DNS names for the various sites can resolve to the same IP address. That's happening on this server.

A modern browser supporting the

SNI or Server Name Indication

extension to TLS specifies the server name in its request,

and the server uses the corresponding

server { ... } block.

However, requests from extremely old browsers without SNI

(IE on Windows XP, for example)

would simply use

the first server block,

getting the first certificate listed there.

If the request is for any site other than the first one defined,

that would imply information about relationships

between sites and organizations.

Those ancient browser clients couldn't make a TLS connection

because they require outdated SSL/TLS versions

that this server doesn't support.

However, tools like the

Qualys scanner

simulate non-SNI browsers.

So, they also retrieve the first server block.

Its certificate will be indicated with

"No SNI" and no details shown by default.

With or without this block, the Qualys report will show "This site works only in browsers with SNI support" in the summary at the top. That's good, as browsers without SNI support are horribly outdated.

The full server block for the bogus "_" site

looks like the following.

The RSA private key and certificate are for

example.com,

generated and self-signed on my server,

because the whole point of this

is to be syntactically valid but entirely meaningless.

[... earlier server { listen 80 } blocks deleted ...]

    server {
        # Must omit 'reuseport' within this bogus 'server { ... }' block only.
        # But in the other HTTPS server blocks it must be used as:
        #   listen 443 quic reuseport;
        listen 443 quic;
        listen 443 ssl;
        http2 on;
        server_name _;

        ssl_certificate /usr/local/etc/letsencrypt/rsa-live/example.com/FakeSite_RSA.crt;
        ssl_certificate_key /usr/local/etc/letsencrypt/rsa-live/example.com/FakeSite_RSA.key;

        # Return a 418 status code indicating that the server will not respond
        # to an unwanted request from a non-SNI browser. See:
        # https://developer.mozilla.org/en-US/docs/Web/HTTP/Status/418
        return 418;
    }

[... following blocks deleted ...]

The Initial "Shared by all sites" Section

This section is within the outermost

http {...} block,

before any of the

server {...} blocks.

It sets what's shared by all sites,

including the complex and crucial specifications

for cryptography, both key agreement and data encryption:

- Basic logistics about data types, default pages, the error page, log format.

- TLS versions and tuning.

- ssl_ciphers, specifying the symmetric cipher suites protecting the data.

- Key agreement algorithms, specifying asymmetric algorithms protecting the keys:

  - ssl_ecdh_curve, listing elliptic curves used with ECDHE or Elliptic-Curve Diffie-Hellman Ephemeral, and/or Key Encapsulation Mechanisms or KEMs using quantum-safe algorithms.

  - Plus, ssl_dhparam for the now seldom-used classic Diffie-Hellman Ephemeral key agreement.

- Compression and TCP tuning parameters.
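The ssl_ecdh_curve value is a single colon-separated string in preference order, so it can help to assemble it from tiers rather than edit one long line by hand. This is a small Python sketch of that idea. The group names are the ones used in this page's nginx.conf, while the tier variables and the function name are only illustrative.

```python
# Preference tiers: hybrids first, then pure post-quantum KEMs, then classic curves.
HYBRID = ["p521_mlkem1024", "SecP384r1MLKEM1024", "x448_mlkem768",
          "X25519MLKEM768", "SecP256r1MLKEM768"]
POST_QUANTUM = ["mlkem1024", "mlkem768", "mlkem512"]
CLASSICAL = ["X448", "X25519", "secp521r1", "secp384r1"]


def ecdh_curve_directive(*tiers):
    """Join the tiers, in order, into one nginx ssl_ecdh_curve directive."""
    groups = [name for tier in tiers for name in tier]
    if len(set(groups)) != len(groups):
        raise ValueError("a group name is listed more than once")
    return "ssl_ecdh_curve " + ":".join(groups) + ";"


print(ecdh_curve_directive(HYBRID, POST_QUANTUM, CLASSICAL))
```

Reordering or dropping a tier then becomes a one-line change, and the duplicate check catches a name pasted in twice.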

Here's the resulting initial section:

[...Opening lines with worker_processes and events not shown...]

http {
    ###########################################################################
    # Start by defining everything that can be shared by all sites.
    # Look for "Context: http" for that directive in:
    # https://nginx.org/en/docs/dirindex.html
    ###########################################################################
    include mime.types;
    default_type application/octet-stream;
    index Index.html index.html;
    error_page 404 /ssi/404page.html;

    # For general log variables see:
    # http://nginx.org/en/docs/http/ngx_http_log_module.html#log_format
    # For SSL-specific log variables see:
    # http://nginx.org/en/docs/http/ngx_http_ssl_module.html#variables
    # This is basically the default plus the last three, which I added
    # to capture statistics on client use of protocols and crypto.
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '$ssl_protocol $ssl_curve $ssl_cipher';

    ###########################################################################
    ## Cryptography and TLS
    ###########################################################################

    # TLS versions 1.2 and 1.3 only, preferring 1.3.
    ssl_protocols TLSv1.3 TLSv1.2;

    # Set aside 1 MB of memory to cache TLS connection details so a
    # subsequent page load can start immediately. That's enough for
    # about 4,000 sessions.
    ssl_session_cache shared:SSL:1m;

    # SSL session cache timeout defaults to 5 minutes, 1 minute should
    # be plenty. This is abused by advertisers like Google and Facebook,
    # long timeouts like theirs will look suspicious. See, for example:
    # https://www.zdnet.com/article/advertisers-can-track-users-across-the-internet-via-tls-session-resumption/
    ssl_session_timeout 1m;

    # Specify the cipher suites. Guidance is available here:
    # https://ssl-config.mozilla.org/
    # https://wiki.mozilla.org/Security/Server_Side_TLS
    #
    # My resulting list, all of which include authenticated encryption.
    # These are TLSv1.2 suite names; the TLSv1.3 suites such as
    # TLS_AES_256_GCM_SHA384 are not set by ssl_ciphers.
    #   Cipher:                          Used by:
    #   ECDHE-ECDSA-AES256-GCM-SHA384    TLSv1.2 with the ECDSA certificate
    #   ECDHE-ECDSA-CHACHA20-POLY1305      "
    #   ECDHE-ECDSA-AES128-GCM-SHA256      "
    #   ECDHE-RSA-AES256-GCM-SHA384      TLSv1.2 with the RSA certificate
    #   ECDHE-RSA-CHACHA20-POLY1305        "
    #   ECDHE-RSA-AES128-GCM-SHA256        "
    #   DHE-RSA-AES256-GCM-SHA384          "
    #   DHE-RSA-CHACHA20-POLY1305          "
    #
    # Unfortunately, unlike Apache, the ssl_ciphers list has to be
    # one enormously long line.
    #
    ssl_ciphers ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384:DHE-RSA-CHACHA20-POLY1305;

    # Have the server prefer them in that order:
    ssl_prefer_server_ciphers on;

    # Not all clients have processors with the AES-NI extension.
    # AES is faster for clients with those processors, but ChaCha20 is
    # 3 times faster for processors without the special instructions.
    # Tell the server to prioritize ChaCha20, which means that if the
    # client reports ChaCha20 as its most-preferred cipher, use it;
    # otherwise the server preference wins.
    ssl_conf_command Options PrioritizeChaCha;

    # Elliptic curves for traditional ECDHE: prefer X448 and X25519 curves
    # first, NIST curves later. Speculation remains about NSA backdoors in
    # NIST curves. Chrome and derivatives dropped secp521r1 when it wasn't
    # listed in NSA's Suite B list. Indexing bots *.search.msn.com will be
    # about the only clients using secp384r1. Few clients support X448.
    #
    # As for the quantum-safe algorithms in the Open Quantum Safe suite,
    # these are just a few because nginx has a limit on the parameter length.
    # To see what liboqs and oqsprovider offer:
    #   $ openssl list -key-exchange-algorithms
    #   $ openssl list -kem-algorithms
    # The OQS algorithms as named within liboqs are listed here:
    # https://github.com/open-quantum-safe/oqs-provider/blob/main/ALGORITHMS.md
    #
    #   Submission   NIST          liboqs      NIST
    #   name         name          name        security level
    #   Kyber512     ML-KEM-512    mlkem512    1  ≈AES 128
    #   Kyber768     ML-KEM-768    mlkem768    3  ≈AES 192
    #   Kyber1024    ML-KEM-1024   mlkem1024   5  ≈AES 256
    #
    # Several choices are hybrid, meaning that each uses both a post-quantum
    # key exchange algorithm along with a traditional elliptic curve. So,
    # if the new post-quantum algorithm is broken, we're still reasonably safe.
    # The hybrid initially named p384_mlkem1024 was renamed SecP384r1MLKEM1024
    # around 30 June 2025, with openssl-oqsprovider-0.9.0. See:
    # https://www.ietf.org/archive/id/draft-kwiatkowski-tls-ecdhe-mlkem-03.html
    # https://datatracker.ietf.org/doc/draft-ietf-hpke-pq/
    # Also see the output of:
    #   /usr/local/bin/openssl list -kem-algorithms | sort
    #
    # Nginx has a parameter length limit, so I have chosen just a few.
    # Be careful, the nomenclature is much better than it used to be, but
    # there is still varying capitalization and use of underbar or not.
    #
    # You can find the two-byte hex TLS identifier within the Client Hello
    # packet, within "Extension: supported groups", within "Supported Groups",
    # although Wireshark won't know what the identifier means. For example,
    #   0x11ec = X25519 / ML-KEM-768 = X25519MLKEM768
    #
    # I am listing hybrids first:
    #   p521_mlkem1024
    #   SecP384r1MLKEM1024
    #   x448_mlkem768
    #   X25519MLKEM768
    #   SecP256r1MLKEM768
    # then pure quantum-safe KEMs:
    #   mlkem1024
    #   mlkem768
    #   mlkem512
    # then pure elliptic curves:
    #   X448
    #   X25519
    #   secp521r1
    #   secp384r1
    ssl_ecdh_curve p521_mlkem1024:SecP384r1MLKEM1024:x448_mlkem768:X25519MLKEM768:SecP256r1MLKEM768:mlkem1024:mlkem768:mlkem512:X448:X25519:secp521r1:secp384r1;

    # The file named here contains the predefined DH group ffdhe4096
    # recommended by IETF in RFC 7919. Those have been audited, and may
    # be more resistant to attacks than randomly generated ones. See:
    # https://wiki.mozilla.org/Security/Server_Side_TLS
    # https://datatracker.ietf.org/doc/html/rfc7919
    # Use the IETF group, don't generate your own with:
    #   $ openssl dhparam -out /etc/ssl/dhparam.pem 4096
    ssl_dhparam /usr/local/nginx/ssl_dhparam;

    ###########################################################################
    # Compression suggestions from:
    # https://www.digitalocean.com/community/tutorials/how-to-increase-pagespeed-score-by-changing-your-nginx-configuration-on-ubuntu-16-04
    # - Level 5 (of 1-9) is almost as good as 9, and is much less work.
    # - Compressing very small things may make them larger.
    # - Compress even for clients connecting via proxies like Cloudflare.
    # - If client says it can handle compression, but it asks
    #   for uncompressed, send it the compressed version.
    # - Nginx compresses HTML by default. Tell it about other data types
    #   that could benefit. Image formats such as JPEG, GIF, PNG, etc.,
    #   are already compressed, while ICO or image/x-icon is not.
    ###########################################################################
    gzip on;
    gzip_comp_level 5;
    gzip_min_length 256;
    gzip_proxied any;
    gzip_vary on;
    gzip_types text/plain text/css application/x-font-ttf image/x-icon application/javascript application/x-javascript text/javascript;

    ###########################################################################
    # TCP Tuning
    #
    # Nagle's algorithm (potentially) adds a 0.2 second delay to every
    # TCP connection. It made sense in the days of remote keyboard
    # interaction, but it gets in the way of transferring many files.
    # Turn on tcp_nodelay to disable Nagle's algorithm.
    #
    # FreeBSD man page for tcp(4) says:
    #   TCP_NODELAY  Under most circumstances, TCP sends data when it is
    #                presented; when outstanding data has not yet been
    #                acknowledged, it gathers small amounts of output to
    #                be sent in a single packet once an acknowledgement
    #                is received.
For a small number of clients, such # as window systems that send a stream of mouse # events which receive no replies, this packetization # may cause significant delays. The boolean option # TCP_NODELAY defeats this algorithm. # # It's on by default, but why not make it explicit: ########################################################################### tcp_nodelay on; ########################################################################### # tcp_nopush blocks data until either it's done or the packet reaches the # MSS, so you more efficiently stream data in larger segments. You can # send a response header and the beginning of a file in one packet, and # generally send a file with full packets. # # FreeBSD man page for tcp(4) says: # TCP_NOPUSH By convention, the sender-TCP will set the "push" # bit, and begin transmission immediately (if # permitted) at the end of every user call to # write(2) or writev(2). When this option is set to # a non-zero value, TCP will delay sending any data # at all until either the socket is closed, or the # internal send buffer is filled. # # This is like the TCP_CORK socket option on Linux. It's only # effective when sendfile is used. ########################################################################### tcp_nopush on; sendfile on; ########################################################################### # Limit how long a keep-alive client connection will stay open at the # server end. ########################################################################### keepalive_timeout 65; ########################################################################### # Enable the use of underscores in client request header fields. ########################################################################### underscores_in_headers on; [... following server { ... } blocks deleted ...] }
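The log_format above appends the TLS protocol, key-exchange group, and cipher to every access-log line so that client usage can be counted later. As a rough sketch of that counting, assuming Python is available on the server, the function below tallies the last three whitespace-separated fields of each line. The function name and the sample log lines are made up for illustration; real values of $ssl_curve depend on what your OpenSSL build reports.

```python
from collections import Counter


def tally_tls_fields(log_lines):
    """Count protocol, curve, and cipher from the last three fields of each line."""
    protocols, curves, ciphers = Counter(), Counter(), Counter()
    for line in log_lines:
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or truncated lines
        # The "main" log_format ends with: $ssl_protocol $ssl_curve $ssl_cipher
        protocol, curve, cipher = fields[-3:]
        protocols[protocol] += 1
        curves[curve] += 1
        ciphers[cipher] += 1
    return protocols, curves, ciphers


# Hypothetical log lines in the "main" format, using documentation IP addresses.
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Jan/2025:00:00:01 +0000] "GET / HTTP/2.0" 200 5120 "-" '
    'TLSv1.3 X25519MLKEM768 TLS_AES_256_GCM_SHA384',
    '198.51.100.7 - - [01/Jan/2025:00:00:02 +0000] "GET /a.html HTTP/1.1" 200 2048 '
    '"https://example.com/some page" TLSv1.2 secp384r1 ECDHE-RSA-AES256-GCM-SHA384',
    '203.0.113.9 - - [01/Jan/2025:00:00:03 +0000] "GET / HTTP/3.0" 200 5120 "-" '
    'TLSv1.3 X25519 TLS_AES_128_GCM_SHA256',
]

if __name__ == "__main__":
    protocols, curves, ciphers = tally_tls_fields(SAMPLE_LOG)
    for title, counter in (("Protocols", protocols), ("Curves", curves), ("Ciphers", ciphers)):
        print(title)
        for value, count in counter.most_common():
            print(f"  {count:6d}  {value}")
```

Taking the fields from the end of the line sidesteps the quoted request and referrer strings, which may contain spaces. Nginx logs an empty variable as "-", so cleartext requests would show up under that key.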

How Strict Regarding TLS Versions and Ciphers?

Decide carefully, based on the clients you want, and need, to support. I have a story that illustrates that.

I went to Nashville to teach an introductory cybersecurity class to Tennessee state government employees. It was a very introductory course, one in which the more qualified students were often frustrated by the lack of meaningful hands-on exercises. So I always added what I could. One thing I always did was tell them about the Qualys scanner at ssllabs.com and have them run a scan of some system that they thought needed to be secure. "For example, your bank or some other site where you do financial transactions."

As usual, I strolled to the back of the room and chatted with some of the people sitting back there while we waited for people to get to the correct site and then run a scan that can take two or three minutes.

Looking toward the front of the room, I had never seen this before — bright red F scores were appearing on everyone's screens!

Usually almost everyone scans their bank, and things aren't perfect but they aren't too bad overall. This week, however, it happened that everyone had scanned public-facing state government servers in the departments where they worked. And, the state-wide web server template had serious problems.

Many of them looked terrified and asked me what they should do. I told them that they should tell someone about this. I had no idea exactly whom they should inform, but someone needed to know about it.

I put my scan of my server up on the projector. We walked through the major differences, with me telling them that they could follow the links in the Qualys scan result for more details. I received very high evaluations that week.

I went back to Nashville two months later. The classes ran in a large state office building with a cafeteria on the ground floor. I would get breakfast there each day, grits and sweet tea.

A guy who had been in the earlier class saw me the first morning and came over to talk. He had really enjoyed the class and gotten a lot out of it. I remembered that he had particularly enjoyed the Qualys scan and been interested in discussing the results. So, I told him that I planned to have them do it again, and hopefully things would work out better. He said that the state IT department had made changes since my last visit. "And, um, let me tell you what else."

On the Monday morning following his course, he set out to get the servers he ran to an A+ score. Within a couple of days, he had. And a few days after that, the state IT department jumped on him.

Their automated scans had detected that his department's servers were no longer adequately backward compatible with old browsers. A state government is obligated to provide services to all residents, at least within reason. But it can't require that all residents immediately upgrade their operating systems to something within the past year or two.

And so, later that week the class did the Qualys scan and everyone testing a state server found an A- score. Things had improved! (But not too much!) I could tell that group about how much things had changed for the better in two months. And, warn them to not get too enthusiastic about locking down their department's servers.

DNS Details for HTTP/3

We're almost to the server { ... } blocks where we define HTTP redirection and HTTPS service for multiple web sites. Finally! But first, we need to set up some DNS details for HTTPS over HTTP/3 and QUIC.

I think of my site, and have designed its layout, with the hostname being simply cromwell-intl.com, without the prefixed www which was popular back in the 1990s. I understand that you or your browser may assume that it's www.cromwell-intl.com, but you will get a DNS response saying "Um, I see what you want, but the canonical name is simply cromwell-intl.com and here is the IP address for that."

Trimming some of the debug-level output from the dig command, we see:

$ dig www.cromwell-intl.com

;; QUESTION SECTION:

;www.cromwell-intl.com. IN A

;; ANSWER SECTION:

www.cromwell-intl.com. 3600 IN CNAME cromwell-intl.com.

cromwell-intl.com. 3600 IN A 35.203.182.32

If you or your application were being obstinate, and you connected to that IP address and then sent over a request with the SNI specifying www.cromwell-intl.com, your browser or other client application would receive an HTTP Response Code 301 (Moved Permanently), redirecting you to the name without www. That's done with Nginx configuration directives, as I'll show you in the next section.

Now let's say that your browser or other client software is forward-thinking, and anticipates that my server probably runs HTTPS. The browser should start by requesting an HTTPS record from DNS. I have set this up properly, so here's what it would get back:

$ dig www.cromwell-intl.com HTTPS

;; QUESTION SECTION:

;www.cromwell-intl.com. IN HTTPS

;; ANSWER SECTION:

www.cromwell-intl.com. 3600 IN CNAME cromwell-intl.com.

cromwell-intl.com. 3600 IN HTTPS 1 . alpn="h3,h2" ipv4hint=35.203.182.32

The multi-part answer first explains with CNAME that the canonical name has no www. Then the HTTPS record explains that while the server of course runs HTTPS over HTTP/1, it also runs HTTPS over HTTP/3 and HTTP/2, preferring HTTP/3 over all alternatives. And, since this would obviously be an immediate follow-up question, the ipv4hint field announces the server's IPv4 address.
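To make the pieces of that HTTPS record concrete, here is a small sketch in Python that splits the record data shown by dig into its priority, target name, and parameters. The function name is my own invention, and it only handles the simple presentation format seen above, not the full escaping rules of RFC 9460.

```python
import shlex


def parse_https_rdata(rdata):
    """Split HTTPS record data into priority, target, and a dict of parameters."""
    # shlex handles the quoted value in alpn="h3,h2".
    tokens = shlex.split(rdata)
    priority = int(tokens[0])  # 0 would mean alias mode, 1 or more is service mode
    target = tokens[1]         # "." means "same name as the record's owner"
    params = {}
    for token in tokens[2:]:
        key, _, value = token.partition("=")
        params[key] = value.split(",") if value else []
    return priority, target, params


if __name__ == "__main__":
    priority, target, params = parse_https_rdata(
        '1 . alpn="h3,h2" ipv4hint=35.203.182.32'
    )
    print("priority:", priority)
    print("target:  ", target)
    for key, values in params.items():
        print(f"{key}: {values}")
```

A client that understands the record can read the alpn list and go straight to QUIC on UDP/443 at the hinted address, rather than first connecting over TCP and learning about HTTP/3 from a response header.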

One server { ... } HTTP Block For Each Site

Now for the server { ... } blocks defining the web sites! We start with one server { ... } block for HTTP for each site. Later, we will have an HTTPS block for each site.

But first, let's set up what happens if they connect to TCP/80. The server will be listening for such a connection, and this example block applies if the client's Host header asks for either cromwell-intl.com or www.cromwell-intl.com. Cleartext HTTP has no TLS handshake, so there is no SNI involved at this point.

The request and any error will be logged, but only the main request for the page. All the images, and CSS style content, and JavaScript, and so on will not be logged.

This site is rooted at /usr/local/nginx/html/, but because they connected via cleartext HTTP, they will be redirected to the corresponding encrypted HTTPS URL. And, if their request had the unneeded www, the redirect will also leave that off.

[... Overall setup: worker_processes, events ...]

http {

    [... shared section used by all sites, explained above ...]

    ###########################################################################
    # HTTP Server: Log and redirect to HTTPS.
    ###########################################################################
    server {
        listen 80;
        server_name cromwell-intl.com www.cromwell-intl.com;
        access_log /var/www/logs/cromwell-intl-access.log main;
        error_log /var/www/logs/cromwell-intl-error.log;

        # Don't log bulky data, even HTTP requests that will be redirected.
        location ~* \.(jpg|jpeg|png|gif|ico|css|js|ttf)$ {
            access_log off;
        }

        root /usr/local/nginx/html;

        # Redirect "www.*" requests to no "www" and HTTPS.
        if ($http_host = www.cromwell-intl.com) {
            return 301 https://cromwell-intl.com$request_uri;
        }

        # Redirect HTTP requests to HTTPS.
        return 301 https://cromwell-intl.com$request_uri;
    }

    [... the same server { ... } for each of the other sites ...]

    [... more detailed HTTPS setup for each site, explained below ...]
}

And that's all it takes! Repeat the above for each site, and then you're ready to continue with the more complex HTTPS blocks.
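If it helps to see the redirect logic outside of Nginx syntax, here is a toy model of it in Python. This is only an illustration of the two return rules in the block above, with a function name I made up; it is not how Nginx evaluates them internally.

```python
def http_redirect(host_header, request_uri):
    """Model the two 'return 301' rules of the HTTP server block."""
    if host_header == "www.cromwell-intl.com":
        # First rule: drop the "www" and switch to HTTPS.
        return 301, "https://cromwell-intl.com" + request_uri
    # Fallback rule: keep the canonical name and switch to HTTPS.
    return 301, "https://cromwell-intl.com" + request_uri


if __name__ == "__main__":
    for host in ("www.cromwell-intl.com", "cromwell-intl.com"):
        status, location = http_redirect(host, "/open-source/?q=nginx")
        print(host, "->", status, location)
```

Both branches land on the same canonical HTTPS URL, and because $request_uri carries the original path and query string, the client ends up on the page it asked for.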

One server { ... } HTTPS Block For Each Site

We need a server { ... } block for each site's use of HTTPS, specifying:

- The server runs TLS with HTTP/1 and HTTP/2 over TCP/443, and HTTP/3 over QUIC on UDP/443.

- Redirection from the name with www. to without.

- Web root location.

- Access and error log locations.

- For bulky static data such as images and CSS, don't log the requests and tell the client to cache the data for up to a week.

- Frustrate attempts to "hotlink" my content, having my server provide images for someone's forum posts.

- There are both ECC and RSA certificates for the site.

- Add several security-related headers to the response.

- Have the PHP pre-processor run all <?php ...?> blocks within all *.html files.

- Finally, rewriting rules to redirect the client when they follow links to pages I have renamed or removed.

Here's an HTTPS server { ... } block that does all that:

# Let the server start one worker process per CPU core,

# allow many connections per worker.

worker_processes auto;

events {

worker_connections 1024;

}

http {

[... Define everything that can be shared by all sites ...]

[... One server { ... } HTTP block for each site ...]

###########################################################################

## HTTPS server block for cromwell-intl.com

###########################################################################

server {

listen 443 quic reuseport;

listen 443 ssl;

http2 on;

server_name cromwell-intl.com www.cromwell-intl.com alt.cromwell-intl.com;

# The additional headers to advertise HTTP/3 over QUIC must be

# within the server { ... } blocks. This is in addition to an

# HTTPS record in DNS, e.g.:

# cromwell-intl.com. 3600 IN HTTPS 1 . alpn="h3,h2" ipv4hint=35.203.182.32

# For more on HTTP/3 and QUIC see:

# https://en.wikipedia.org/wiki/HTTP/3

# https://www.f5.com/glossary/quic-http3

# https://www.cloudflare.com/learning/performance/what-is-http3/

add_header Alt-Svc 'h3=":443";ma=86400, quic=":443";ma=86400';

add_header Alt-Svc 'h2=":443";ma=86400;persist=1';

add_header Alt-Svc 'h2=":443";ma=86400';

# ssl_early_data is susceptible to replay attacks in certain situations.

# However, I'm not doing financial transactions on this server. So,

# for my server this is safe with no further processing. For a good

# explanation, see:

# https://blog.trailofbits.com/2019/03/25/what-application-developers-need-to-know-about-tls-early-data-0rtt/

ssl_early_data on;

quic_retry on;

# Redirect if hostname starts "www."

if ($http_host = www.cromwell-intl.com) {

return 301 https://cromwell-intl.com$request_uri;

}

root /usr/local/nginx/html;

#######################################################################

## Logging and cache control for images, CSS, JavaScript, fonts

#######################################################################

access_log /var/www/logs/cromwell-intl-access.log main;

error_log /var/www/logs/cromwell-intl-error.log;

location ~* \.(jpg|jpeg|png|gif|ico|css|js|ttf)$ {

###############################################################

# Logging

###############################################################

# Don't log bulky data.

# HOWEVER, it seems we get a log entry no matter what

# if the request was referred in by something else. For

# example, fetching an image because of Google search.

access_log off;

###############################################################

# Caching

###############################################################

# Tell client to cache bulky data for 7 days, which is

# the Google Pagespeed recommendation / requirement.

expires 7d;

# "Pragma public" = Now rather outdated, skip it.

# "public" = Cache in browser and any intermediate caches.

# "no-transform" = Caches may not modify my data formats.

add_header Cache-Control "public, no-transform";

# Tell client and intermediate caches to understand that

# compressed and uncompressed versions are equivalent.

# Goes with gzip_vary below. More details here:

# https://blog.stackpath.com/accept-encoding-vary-important

add_header Vary "Accept-Encoding";

###############################################################

# Block image hotlinking. I changed "rewrite" to "return"

# in the description provided here:

# http://nodotcom.org/nginx-image-hotlink-rewrite.html

# Also see:

# http://nginx.org/en/docs/http/ngx_http_referer_module.html

###############################################################

valid_referers none blocked ~\.google\. ~\.printfriendly\. ~\.bing\. ~\.yahoo\. ~\.baidu.com server_names ~($host);

if ($invalid_referer) {

return 301 https://$host/pictures/denied.png;

}

}

# Needed because of above hotlink redirection.

location = /pictures/denied.png { }

#######################################################################

# Certificates and private keys.

# Send both ECC and RSA certificates.

# Generating and renewing Let's Encrypt certificates described here:

# https://cromwell-intl.com/open-source/google-freebsd-tls/tls-certificate.html

#######################################################################

# ECC

ssl_certificate /usr/local/etc/letsencrypt/ecc-live/cromwell-intl.com/fullchain.pem;

ssl_certificate_key /usr/local/etc/letsencrypt/ecc-live/cromwell-intl.com/privkey.pem;

# RSA

ssl_certificate /usr/local/etc/letsencrypt/rsa-live/cromwell-intl.com/fullchain.pem;

ssl_certificate_key /usr/local/etc/letsencrypt/rsa-live/cromwell-intl.com/privkey.pem;

#######################################################################

# Security headers

# Check security headers at:

# https://cspvalidator.org/

# https://report-uri.io/home/analyse

# https://securityheaders.com/

#######################################################################

# HSTS -- HTTP Strict Transport Security

# The HSTS Preloading mechanism uses a list compiled by Google,

# and is used by major browsers such as Chrome, Firefox, Opera,

# Safari, and Edge.

add_header Strict-Transport-Security "max-age=31536000; includeSubDomains; preload";

# Support for OCSP Must-Staple is done in the CSR when the certificate

# is generated. HOWEVER, Let's Encrypt announced in early 2025 that

# OCSP Must-Staple certificate requests would be denied starting in

# May 2025, as they were switching to CRL-only.

# X-Frame-Options

add_header X-Frame-Options "SAMEORIGIN";

# Turn on XSS / Cross-Site Scripting protection in browsers.

# "1" = on,

# "mode=block" = block an attack, don't try to sanitize it

add_header X-Xss-Protection "1; mode=block";

# Tell the browser (Chrome and Explorer, anyway) not to "sniff" the

# content and try to figure it out, but simply use the MIME type

# reported by the server.

# This means that all files named "*.jpg" must be JPEG, and so on!

add_header X-Content-Type-Options "nosniff";

# I set Referrer-Policy liberally. I think referrer info

# can be helpful without being absolutely trustworthy,

# and I don't have any scandalous or sensitive URLs.

# See https://scotthelme.co.uk/a-new-security-header-referrer-policy/

add_header Referrer-Policy "no-referrer-when-downgrade";

# Content Security Policy

# *If* a client could add a stylesheet, then they could drastically

# change visibility and appearance. Also, there is a way to steal

# sensitive data from within a page. See:

# https://www.mike-gualtieri.com/posts/stealing-data-with-css-attack-and-defense

# However, none of my pages have forms or handle sensitive data.

# So, I feel safe using 'unsafe-inline' below.

#

# NOTE that if I didn't do that, I would have to convert every single

# 'style="..."' string to a CSS class instead. The below limits CSS

# to coming from my site and *.googleapis.com and cdn.jsdelivr.net

# while allowing inline 'style="..."', which seems plenty safe for me.

add_header Content-Security-Policy "upgrade-insecure-requests; style-src https://cromwell-intl.com https://alt.cromwell-intl.com https://*.googleapis.com 'unsafe-inline'; frame-ancestors 'self';";

# Permissions Policy, see:

# https://scotthelme.co.uk/goodbye-feature-policy-and-hello-permissions-policy/

add_header Permissions-Policy "fullscreen=(self)";

# As recommended by https://frog.tips

add_header X-Frog-Unsafe "0";

# Include the TLS protocol version and negotiated cipher

# in the HTTP headers, so we can capture them in the logs.

add_header X-HTTPS-Protocol $ssl_protocol;

add_header X-HTTPS-Cipher $ssl_cipher;

add_header X-HTTPS-Curve $ssl_curve;

#######################################################################

# Process all *.html files as PHP. The php-fpm service must be

# running, listening on TCP/9000 on localhost only.

# The try_files line serves the 404 error page if they ask for

# a non-existent file. Without that, you get a cryptic error AND

# it causes a security hole, see:

# http://forum.nginx.org/read.php?2,88845,page=3

#######################################################################

location ~ \.html$ {

try_files $uri =404;

include fastcgi_params;

fastcgi_index Index.html;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

fastcgi_param TLS_PROTOCOL $ssl_protocol;

fastcgi_param TLS_CIPHER $ssl_cipher;

fastcgi_param TLS_CURVE $ssl_curve;

fastcgi_param QUERY_STRING $query_string;

fastcgi_param REQUEST_METHOD $request_method;

fastcgi_param CONTENT_TYPE $content_type;

fastcgi_param CONTENT_LENGTH $content_length;

fastcgi_param SCRIPT_NAME $fastcgi_script_name;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

fastcgi_param REQUEST_URI $request_uri;

fastcgi_param DOCUMENT_URI $document_uri;

fastcgi_param DOCUMENT_ROOT $document_root;

fastcgi_param SERVER_PROTOCOL $server_protocol;

fastcgi_param REMOTE_ADDR $remote_addr;

fastcgi_param REMOTE_PORT $remote_port;

fastcgi_param SERVER_ADDR $server_addr;

fastcgi_param SERVER_PORT $server_port;

fastcgi_param SERVER_NAME $server_name;

fastcgi_hide_header X-Powered-By;

fastcgi_pass 127.0.0.1:9000;

}

#######################################################################

# Rewrite rules go here. They're written as:

# rewrite OLD_REGEX NEW permanent;

# That returns with HTTP 301 Permanent Redirect. If you instead use

# "redirect", it returns with HTTP 302 Temporary Redirect.

#######################################################################

[... Rewrite rules ...]

}

[... Server { ... } HTTPS blocks for each of the other sites ...]

}
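After a change to a block like this, it can be handy to confirm that the security headers actually arrive. The sketch below, in Python, compares a set of response headers against the values configured above. The function and dictionary names are hypothetical, and it checks only a handful of the headers; you would feed it the headers from whatever HTTP client you prefer.

```python
# Expected values copied from the add_header lines in the server block.
EXPECTED_HEADERS = {
    "Strict-Transport-Security": "max-age=31536000; includeSubDomains; preload",
    "X-Frame-Options": "SAMEORIGIN",
    "X-Content-Type-Options": "nosniff",
    "Referrer-Policy": "no-referrer-when-downgrade",
    "Permissions-Policy": "fullscreen=(self)",
}


def check_security_headers(response_headers):
    """Return a list of problems; an empty list means every expected header matched."""
    # HTTP header names are case-insensitive, so compare them in lowercase.
    received = {name.lower(): value for name, value in response_headers.items()}
    problems = []
    for name, expected in EXPECTED_HEADERS.items():
        actual = received.get(name.lower())
        if actual is None:
            problems.append(f"missing {name}")
        elif actual != expected:
            problems.append(f"{name}: expected {expected!r}, got {actual!r}")
    return problems


if __name__ == "__main__":
    # A made-up response that is missing most headers and has one wrong value.
    sample = {"x-frame-options": "DENY", "X-Content-Type-Options": "nosniff"}
    for problem in check_security_headers(sample):
        print(problem)
```

One case worth checking with something like this: Nginx inherits add_header directives from the enclosing level only when the current level defines none of its own. The static-file location above sets its own Cache-Control and Vary headers, so responses for images, CSS, and JavaScript would not carry the server-level security headers unless they are repeated there.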

Quantum-Safe Clients

In Chrome, go to chrome://flags, and in Firefox, go to about:config. Search for kyber and, if needed, make two changes in each browser.

The Developer tools pane in Chrome has a Privacy and security tab that describes the connection. My sites put the connection protocols and crypto details into the footer on every page. But not everyone does that.

The curl tool can use these post-quantum and hybrid mechanisms if it is compiled to use a version of OpenSSL for which you have set up oqsprovider.

What I describe on this page gets Nginx supporting all the post-quantum and hybrid algorithms supported by liboqs.so. Clients will support few to none of those. In early 2025, Chrome and Firefox supported hybrid x25519/ML-KEM-768 and that was about it.

If you use Wireshark to capture a TLS v1.3 connection using PQC, it may fail to decode the two-byte codes for the algorithms. You'll find these in the Client Hello packet, within Supported Groups, inside Extension: supported_groups. Refer to the oqs-provider page listing the algorithm names and IDs.

Here's what I see for Firefox offering KEX methods. 0x11ec is the hybrid x25519/ML-KEM-768 known in liboqs as X25519MLKEM768 while 0x001d is plain X25519 and so on.
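When Wireshark doesn't know a code, you can decode the list by hand. As a minimal sketch in Python, the function below takes the body of a supported_groups extension (a two-byte list length followed by two-byte group IDs) and names the ones it recognizes. The table holds only a few entries from the IANA TLS Supported Groups registry as I understand it, and the sample bytes are made up rather than copied from a real capture.

```python
import struct

# A few code points from the IANA "TLS Supported Groups" registry.
GROUP_NAMES = {
    0x0017: "secp256r1",
    0x0018: "secp384r1",
    0x0019: "secp521r1",
    0x001D: "X25519",
    0x001E: "X448",
    0x11EB: "SecP256r1MLKEM768",
    0x11EC: "X25519MLKEM768",
    0x11ED: "SecP384r1MLKEM1024",
}


def decode_supported_groups(extension_data):
    """Decode the body of a supported_groups extension into (id, name) pairs."""
    # The body starts with a two-byte, big-endian length of the list that follows.
    (list_length,) = struct.unpack_from("!H", extension_data, 0)
    if list_length % 2 or list_length + 2 > len(extension_data):
        raise ValueError("malformed supported_groups list")
    groups = []
    for offset in range(2, 2 + list_length, 2):
        (group_id,) = struct.unpack_from("!H", extension_data, offset)
        name = GROUP_NAMES.get(group_id, f"unknown 0x{group_id:04x}")
        groups.append((group_id, name))
    return groups


if __name__ == "__main__":
    # Hypothetical client offer: hybrid first, then three classical curves.
    sample = bytes.fromhex("0008" "11ec" "001d" "0017" "0018")
    for group_id, name in decode_supported_groups(sample):
        print(f"0x{group_id:04x}  {name}")
```

The order of the list is the client's own preference order, so a hybrid appearing first is a good sign that the browser will use it when the server offers it too.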

Results — A+ from Qualys / ssllabs.com

Here's the score, you can check it yourself.

The Qualys report also shows me that the cryptography restricts clients to reasonably up-to-date operating systems and browsers. So, there's no need for ancient CSS markup like -moz-column-count, -webkit-column-count, and so on. Browsers new enough to handle the required cryptography should also handle CSS 3.

Results — A+ from SecurityHeaders.com

Here's the score, you can check it yourself.

Further Notes

On my site:

- What's the Point of Asymmetric Encryption?

- How Does Asymmetric Cryptography Work?

- Quantum Computing and Quantum-Safe Cryptography

On other sites:

- US NIST Post-Quantum Cryptography Project

- Cloudflare discussion of PQC (April 2022)

- Cloudflare Research page to test your browser

- The SSL Store blog on Google Chrome PQC support (August 2023)

- Open Quantum Safe Project

- Open Quantum Safe at Github

- open-quantum-safe / liboqs

- open-quantum-safe / oqs-provider

- oqs-provider algorithm names and IDs

- Lan Tian's Blog

This project isn't possible on typical GoDaddy hosting, but if you're curious I can show you a way to automate TLS certificate renewal on GoDaddy.